Providing application teams with a self-service way of deploying applications and their dependencies often means that platform administrators have to hide the implementation details of the platform via simple to consume APIs. In the case of Kubernetes this usually means having to deploy Custom Resource Definition that are either 3rd party or custom built in-house. These CRD’s in addition to allowing Kubernetes users to manage non-Kubernetes objects via the Kubernetes Resources Model (Aka YAML file), they also allow abstracting away the details of how some resources get created and managed.

Let’s take a hypothetical example of a CRD that allows users to deploy a Cloud hosted Database. A developer might define an object this way:

apiVersion: apps/v1

kind: CloudDatabase

metadata:

name: my-database

spec:

replicas: 1

selector:

matchLabels:

app: my-database

spec:

version: 1.3

port: 4568

This object follows a specific schema defined in the CRD and will trigger a controller (part of the CRD magic of Kubernetes) to provision the Cloud Hosted DB and manage its lifecycle.

Now this is all great until you realize an application stack is usually more than just a frontend, backend, and DB. It usually includes things like monitoring and logging configs, queue messaging systems, blob storage, load balancers, policies, and more. This is where YAML nightmare starts, as a developer trying to deploy a fully functioning application, you will have to understand, write, maintain, and troubleshoot all these YAML files. Tools like Kustomize and Helm typically help with templating and re-using 3rd party deployment manifests, but they don’t give full customization flexibility to the end user.

This is where the resources composition concept of Crossplane becomes an interesting option. Compositions is a feature in Crossplane that allows platform admins to create high-level abstractions and make them available inside Kubernetes. The end users (usually developers) can then “Instantiate” these compositions by creating an object using the schema of the composition, the controller associated with compositions then generates and manages the lifecycle of the low level components. The only caveat is that crossplane compositions only support non-kubernetes objects.

Up until now Kubernetes lacked a native way to support the same concept of compositions for any type of object. This is where KRO comes into the picture.

Enter Kubernetes Resource Orchestrator

KRO (Kubernetes Resource Orchestrator) is a Kubernetes-native, open-source project that simplifies how you deploy and manage applications on Kubernetes. It lets you create custom APIs to manage groups of resources as a single unit. Instead of developers dealing with the complexities of individual Kubernetes objects, KRO allows platform teams to build higher-level APIs that encapsulate everything needed to run an application.

Before we go into the details of how KRO works under the hood. Let's take a look at a simple example. Assume a developer needs to deploy a web application to Kubernetes and expose it to the internet behind a load balancer. You will have to define 3 different objects: A Deployment, a Service, and an Ingress:

apiVersion: apps/v1

kind: Deployment

metadata:

name: sample-app

spec:

replicas: 1

selector:

matchLabels:

app: sample-app

template:

metadata:

labels:

app: sample-app

spec:

containers:

- name: sample-app

image: nginx:latest

ports:

- containerPort: 80apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: example-ingress

spec:

rules:

- host: example.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: web-service

port:

number: 80

NB: This is a very simple example. Typically Kubernetes manifests for web apps are more complex than this and might include Secrets, ConfigMaps, custom healthCheck configs, policies…

KRO introduces a CRD called ResourceGraphDefinition. It allows admins to define a new API Object, its schema (what fields will be needed) and its compositions (what low-level objects it manages). Using our example above, we can define an objects called my-application this way:

apiVersion: kro.run/v1alpha1

kind: ResourceGraphDefinition

metadata:

name: my-application

spec:

# kro uses this simple schema to create your CRD schema and apply it

# The schema defines what users can provide when they instantiate the RGD (create an instance).

schema:

apiVersion: v1alpha1

kind: Application

spec:

# Spec fields that users can provide.

name: string

image: string | default="nginx"

port: integer | default=80

ingress:

enabled: boolean | default=false

status:

# Fields the controller will inject into instances status.

deploymentConditions: ${deployment.status.conditions}

availableReplicas: ${deployment.status.availableReplicas}

# Define the resources this API will manage.

resources:

- id: deployment

template:

apiVersion: apps/v1

kind: Deployment

metadata:

name: ${schema.spec.name} # Use the name provided by user

spec:

replicas: 3

selector:

matchLabels:

app: ${schema.spec.name}

template:

metadata:

labels:

app: ${schema.spec.name}

spec:

containers:

- name: ${schema.spec.name}

image: ${schema.spec.image} # Use the image provided by user

ports:

- containerPort: ${schema.spec.port}

- id: service

template:

apiVersion: v1

kind: Service

metadata:

name: ${schema.spec.name}-service

spec:

selector: ${deployment.spec.selector.matchLabels} # Use the deployment selector

ports:

- protocol: TCP

port: ${schema.spec.port}

targetPort: ${schema.spec.port}

- id: ingress

includeWhen:

- ${schema.spec.ingress.enabled} # Only include if the user wants to create an Ingress

template:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ${schema.spec.name}-ingress

spec:

rules:

- http:

paths:

- path: "/"

pathType: Prefix

backend:

service:

name: ${service.metadata.name} # Use the service name

port:

number: ${service.spec.ports[0].port}

Note that this ResourceGraphDefinition contains 3 important sections:

- The Metadata section which contains the name of the ResourceGraphDefinition

- The schema section which declares the fields this ResourceGraphDefinition object has. In this example our my-application object has fields like name, image, port, ingress. Each of these fields has a type (string, integer or boolean) and some of them have default values. These fields are the values that end users who will instantiate this object will have to set.

- The resources section which defines the low-level Kubernetes objects managed by this ResourceGraphDefinition. This is where we find our native Kubernetes Deployment, Service and Ingress.

Once the my-application object is defined and applied to the cluster. It should be available to list with kubernetes get ResourceGraphDefinition -o wide.

Now a developer who in the previous example had to define all the low-level objects manually to deploy their web application. Can simply create an instance of the my-application object with a simple YAML file that looks like:

apiVersion: kro.run/v1alpha1

kind: Application

metadata:

name: my-application-instance

spec:

name: my-awesome-app

ingress:

enabled: true

Once this YAML is applied to the cluster we can query to see if the object has been applied properly and there are no errors.

➜ kubectl get Application

NAME STATE SYNCED AGE

my-application-instance ACTIVE True 3h

We can also see if the low-level resources defined in our ResourceGraphDefinition has been generated:

➜ kubectl get deploy,svc,ing

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/my-awesome-app 3/3 3 3 3h1m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP x.x.x.x <none> 443/TCP 3h15m

service/my-awesome-app-service ClusterIP x.x.x.x <none> 80/TCP 3h1m

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/my-awesome-app-ingress <none> * x.x.x.x 80 3h1m

The example above shows how resource grouping can be leveraged to manage Kubernetes objects easily. KRO has the advantage of being Cloud and Object agnostic, unlike Crossplane it can manage any Kubernetes object whether it’s native (aka part of the Kubernetes upstream API) or installed via other type of CRD’s.

Platform admins can also leverage KRO to manage Cloud Platform specific deployments using KRM. The major cloud providers provide their own set of CRD’s and controllers for this. For example ACK for AWS, KCC for Google Cloud, or ASO for Azure.

Managing Cloud Infrastructure with KRO

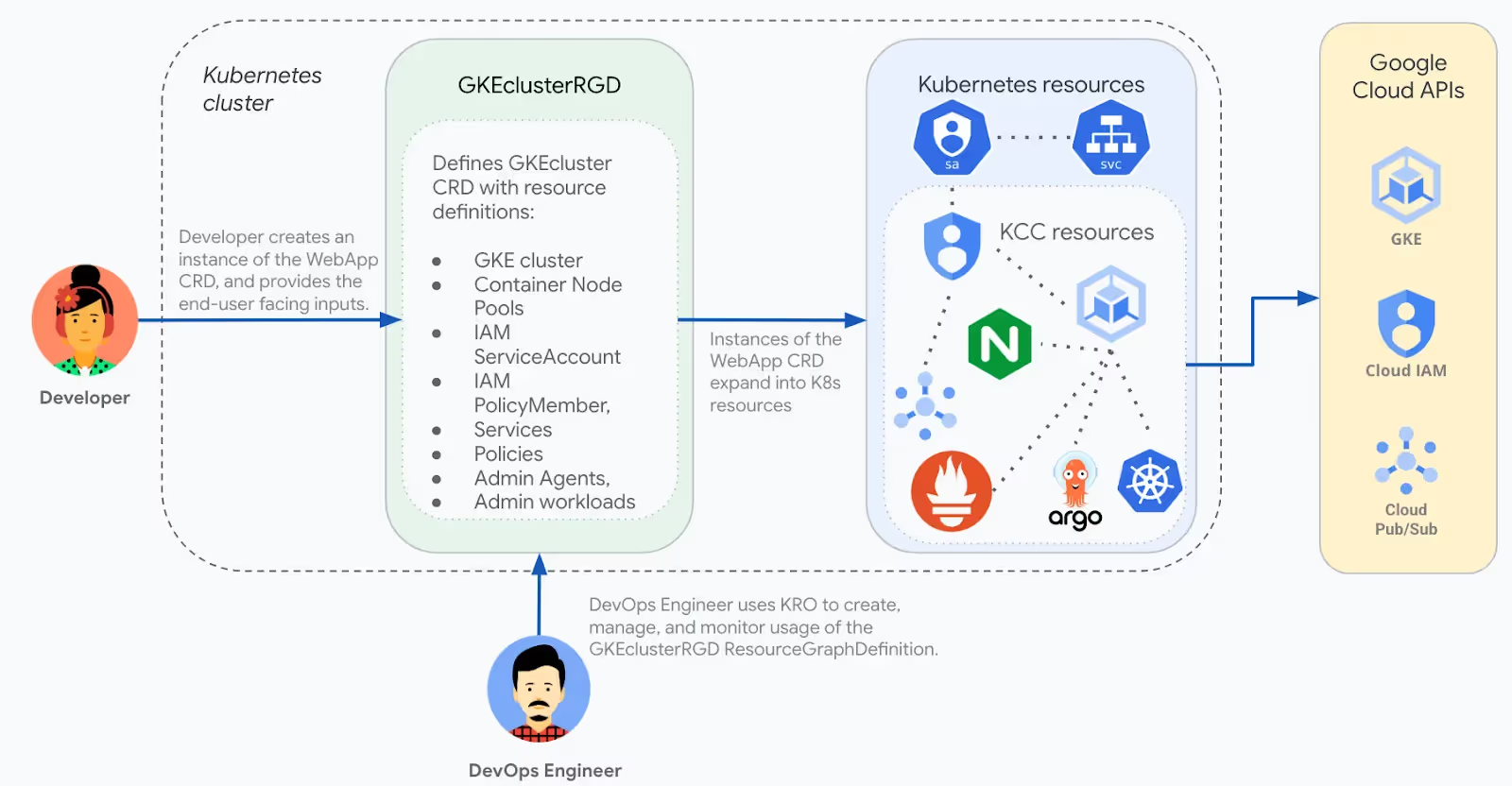

Let’s look at a specific example of Google Cloud. Assuming an admin want to leverage KRO to manage the lifecycle of GKE clusters and only expose to end users certain fields while hiding some other parameters for security, compliance or standardization purposes. A platform admin can create an RGD called GKEClusterRGD that allows a developer to provision a GKE cluster alongside nodepools, IAM policies. They can also make sure some admin workloads (security agents, logging and monitoring tools…) are installed by default and cannot be removed by the developer. In this case the admin can define the RGD like in this example (illustrated below).

A developer can then create an instance of the GKEClusterRGD and set some parameters to get a cluster provisioned. You will notice the difference between the full blown definition of the RGD and the instance that will actually provision the cluster. This is also one of the powers of KRO, simplification.

apiVersion: kro.run/v1alpha1

kind: GKECluster

metadata:

name: krodemo

namespace: config-connector

spec:

name: krodemo # Name used for all resources created as part of this RGD

location: us-central1 # Region where the GCP resources are created

maxnodes: 4 # Max scaling limit for the nodes in the new nodepool

Advanced examples:

So far we have seen an example of a simple web application grouping and infrastructure grouping. Can we combine the two? The answer is Yes. KRO can be used to define any type of grouping mixing both application specific resources and cloud specific resources. The example below shows can an Ollama deployment on Elastic Kubernetes Services (EKS) can be combined with Infrastructure components to simplify the deployment of LLMs.

The RGD definition exposes some settings like storage sizes, model parameters, resources requests and limits and accelerators configs. Notice that in the resources section there is a resource to managed a storage (which in this example leverages AWS EBS CSI Driver to provision the underlying disks) and Kubernetes standard resources like Deployments, Services and Ingress. Creating an instance of this Ollama deployment becomes a very short yaml file of ~30 lines.

apiVersion: kro.run/v1alpha1

kind: OllamaDeployment

metadata:

name: llm

spec:

name: llm

namespace: default

values:

storage: 50Gi

model:

name: llama3.2 # phi | deepseek

size: 1b

resources:

requests:

cpu: "2"

memory: "8Gi"

limits:

cpu: "4"

memory: "16Gi"

gpu:

enabled: false

count: 1

ingress:

enabled: false

port: 80

Conclusion:

In conclusion, KRO (Kubernetes Resource Orchestrator) is a Kubernetes-native, open-source project that offers a simplified approach to application deployment and management on Kubernetes. By enabling the creation of custom APIs, KRO allows platform teams to encapsulate the complexities of Kubernetes deployments, providing developers with higher-level interfaces tailored to their specific needs.

Please note that KRO is still in active development and should be considered an Alpha product. However, exploring the documentation and experimenting with the tool should give you some insight into the simplification the tool can bring into the Kubernetes world.