Platform engineering is the critical foundation for scaling generative AI and moving beyond MLOps bottlenecks to a robust, self-service paradigm for developers.

The generative AI revolution is not just about models; it's about deployment. Across every industry, organizations are racing to harness Large Language Models (LLMs), but a formidable barrier stands between prototype and production: the deployment bottleneck. Moving LLMs into live environments is notoriously complex, demanding a rare fusion of expertise in machine learning, DevOps, and cloud-native infrastructure. This operational hurdle leaves data science and development teams in a long queue for a specialized central team, stifling the very agility LLMs are meant to inspire.

Enter platform engineering. This strategic discipline is the key to unlocking enterprise-wide AI adoption. By building a standardized Internal Developer Platform (IDP), organizations can abstract away the complexities of LLM infrastructure, creating a "paved road" for developers and data scientists. This enables a true self-service model for deploying, managing, and scaling models securely and efficiently, transforming generative AI from a centralized specialty into a democratized capability.

The new frontier: Challenges in LLM operations

Deploying LLMs effectively involves far more than simply running a model, as each production launch without a platform-based approach metastasizes into a bespoke, high-friction project that demands a manual, cross-functional slog through provisioning scarce GPU infrastructure, configuring hyper-specific software drivers, optimizing performance with specialized inference servers, and engineering robust systems for auto-scaling, observability, and security.

- Infrastructure provisioning: Allocating and configuring GPUs, specialized hardware, networking, and storage is a manual, error-prone task that requires deep infrastructure expertise.

- Model packaging and versioning: Ensuring models are correctly containerized with all their dependencies, and that versions can be managed and rolled back, is critical for stability.

- Deployment automation: Orchestrating the entire deployment process, including scaling, health checks, and traffic management in a distributed environment like Kubernetes, is a significant engineering effort.

- Comprehensive observability: Gaining deep insights into model performance, token usage, inference latency, and resource utilization requires a complex, integrated monitoring stack.

- Security and access control: Implementing robust security measures for model endpoints and the underlying infrastructure is non-negotiable and often complex.

For Retrieval Augmented Generation (RAG) deployments, the complexity multiplies. This includes deploying and managing the lifecycle of vector databases, integrating with data sources, and ensuring efficient data synchronization and indexing. The orchestration layer, which manages the retrieval and generation process, must be robustly deployed and scaled alongside the model itself. Without a well-defined platform, these tasks create immense operational drag and hinder developer velocity.

The platform engineering solution: From friction to flow

Platform engineering transforms this complex landscape by creating a standardized, self-service environment that abstracts infrastructure complexity while maintaining operational control. The goal is to maximize developer velocity without sacrificing reliability. A well-designed LLM platform provides developers with intuitive interfaces and automated workflows, allowing them to focus on what they do best: building innovative applications.

A comprehensive LLM platform engineering strategy must deliver:

- Declarative model management: Developers should define their LLM requirements, model type, hardware needs, scaling policies, via simple configuration files or API calls, not by writing for instance complex Kubernetes manifests or Terraform code.

- Automated infrastructure orchestration: The platform must seamlessly handle the provisioning of compute resources, including GPU clusters, storage, and networking. It should automatically scale resources based on demand and optimize costs intelligently.

- Integrated monitoring and observability: The platform should provide out-of-the-box visibility into model performance, resource utilization, and system health, without requiring developers to become monitoring experts.

- Built-in governance and compliance: All deployments must automatically adhere to organizational policies for security, resource usage, and data governance, enforced by the platform.

- RAG lifecycle management: For RAG systems, the platform must extend these capabilities to include the automated provisioning, data ingestion, and synchronization of vector databases.

This platform-centric approach transforms LLM deployment from a complex, artisanal process into a streamlined, repeatable workflow that scales with the organization's ambitions.

How to build a production-ready LLM deployment stack

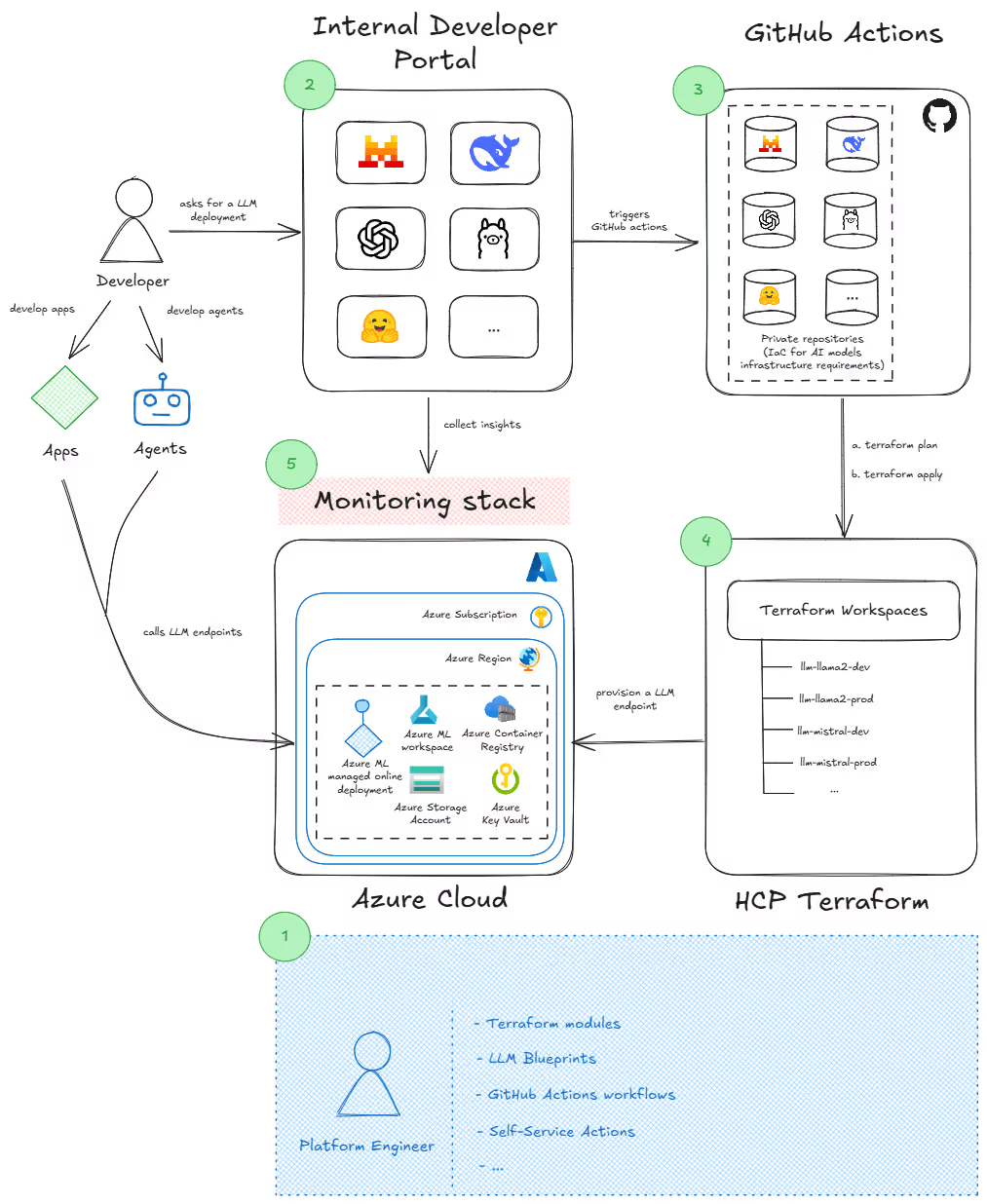

This solution leverages a powerful, modern combination of tools to achieve true self-service LLM deployment using three distinct, collaborative layers, creating a clear separation of concerns:

- The infrastructure foundation (HCP Terraform): At the base, platform engineers use HCP Terraform to define and govern the reusable infrastructure building blocks, like Kubernetes clusters, GPU node pools, and vector databases. This Infrastructure as Code approach is secured with HCP's policy enforcement and shared through a private registry, ensuring all infrastructure is standardized, compliant, and reliable.

- The automation engine (GitHub Actions): This layer serves as the engine for CI/CD. Triggered by a self-service request, a GitHub Actions workflow orchestrates the entire process: building the application container, securely authenticating with the cloud, and pushing the deployment directly to the infrastructure provisioned by Terraform.

- The developer experience layer (Port): Acting as the single pane of glass, Port provides the unified interface for developers. It abstracts away all underlying complexity, presenting a curated catalog of LLM templates. From here, developers can initiate the entire deployment workflow with a single click and monitor their application's status, creating a true self-service experience.

A self-service workflow in action

This schema describes how these tools integrate to create a seamless, self-service experience in deploying LLM on Microsoft Azure:

- Define golden paths (Platform Team):

- Platform engineers create version-controlled Terraform modules for the required infrastructure (e.g., an Kubernetes cluster with GPU nodes, AI services operated by a cloud provider).

- Within Port, they define "Blueprints" for LLM deployments. A blueprint is a schema for a service, like an "AI Model," defining its properties (model name, version) and other required inputs.

- For each blueprint, they configure a "Self-Service Action" in Port. This action is the developer's "Deploy" button, configured to trigger a specific GitHub Actions workflow.

- Developer self-service (Developer):

- A developer logs into the Port IDP and navigates to the software catalog.

- They select the desired LLM blueprint, for instance, "Deploy Mistral LLM."

- Clicking the "Deploy" action presents a simple form (generated by Port from the blueprint) where the developer provides parameters like their application's Git repository and the desired environment (e.g., staging).

- GitHub Actions orchestrates deployment:

- Submitting the form in Port triggers the pre-configured GitHub Actions workflow via a webhook. This workflow is the automated engine of the platform. It interacts with HCP Terraform to automate infrastructure provisioning needs.

- HCP Terraform governs infrastructure automation:

- When triggered by the GitHub Actions workflow, HCP Terraform acts as the secure and governed engine for infrastructure automation. The workflow initiates a run within a designated HCP Terraform workspace, which then executes the following:

- Plan & govern: It generates a Terraform plan based on the pre-defined modules. Before execution, this plan is automatically checked against governance policies defined using Sentinel or OPA. This critical step ensures all requested infrastructure complies with organizational standards for cost, security, and approved cloud services.

- Provision: Once the plan is validated, HCP Terraform securely provisions or modifies the necessary infrastructure in the target cloud environment.

- State management: Throughout the process, it securely manages the Terraform state file, providing a reliable source of truth and preventing configuration drift or conflicts from concurrent runs.

- When triggered by the GitHub Actions workflow, HCP Terraform acts as the secure and governed engine for infrastructure automation. The workflow initiates a run within a designated HCP Terraform workspace, which then executes the following:

- Unified monitoring and management:

- To close the feedback loop, the platform provides a unified view of operational health by integrating directly with the organization's central observability platform (e.g., Datadog, New Relic, Grafana). Rather than building brittle, one-off connections to every tool, Port leverages the rich, correlated data already present in your monitoring solution.

- An integration pipeline pulls comprehensive operational data, from deployment events logged by GitHub Actions to live performance metrics (like GPU utilization and inference latency) and resource health captured from the cloud infrastructure, into the Internal Developer Platform. This enriches the software catalog with real-time status, creating a holistic view of the deployed service.

The strategic benefits

Adopting this platform-driven approach yields transformative benefits:

- Accelerated developer velocity: By providing a self-service path to production, developers can deploy, iterate, and experiment with LLMs independently, drastically reducing time-to-market.

- Reduced operational overhead: Automating infrastructure and deployment workflows with Terraform and GitHub Actions minimizes manual effort, eliminates configuration drift, and frees the platform team to focus on higher-value work.

- Enhanced reliability and consistency: Infrastructure as Code combined with version-controlled automation workflows ensures that every deployment is consistent and reproducible. Deployment patterns are standardized, simplifying troubleshooting and ensuring best practices are followed universally.

- Strengthened governance and security: Port acts as a centralized control plane. By exposing curated blueprints and actions, the platform team ensures that only authorized developers can deploy approved model types to appropriate environments, with all necessary security policies enforced automatically by the deployment workflow.

- Centralized observability: The IDP becomes the single source of truth for all deployed LLM applications, giving developers and operations teams a unified view of status, performance, and health, leading to faster issue resolution.

Conclusion

The journey from a promising LLM prototype to a production-grade application has, for too long, been a path fraught with friction, technical silos, and frustrated ambition. The ad-hoc, bespoke deployments of the past created an operational bottleneck that stifled the very innovation generative AI promised to unleash. By strategically architecting a platform that combines infrastructure foundation, workflows automation, and internal developer portal , organizations can decisively dismantle this barrier and move at the speed of ideas.

This solution is more than a simple toolchain; it is a cohesive system where each component plays a critical, synergistic role. In our example, HCP Terraform provides the governed, reliable, and standardized "paved road," ensuring that all infrastructure is secure, compliant, and cost-effective by design. GitHub Actions serves as the tireless automation engine that travels this road, executing every deployment with perfect, auditable consistency. Finally, Port acts as the empowering interface, the "single pane of glass", that abstracts away the immense complexity of the road and the engine, allowing developers to deploy and manage powerful AI capabilities with unprecedented simplicity and autonomy.

Ultimately, the most profound impact of this model is human and cultural. It transforms the role of the platform team from gatekeepers of infrastructure to enablers of innovation. It elevates developers and data scientists from being passive requesters to active owners of their applications across the entire lifecycle. This fosters a culture of accountability, experimentation, and speed, dramatically shortening the cycle from concept to real-world impact. When the friction of deployment is removed, teams are free to ask "what if?" more often, testing new models and iterating on user feedback at a pace that was previously unimaginable.

As we move further into 2025, it is abundantly clear that the true differentiator in the AI race will not be access to models, but the organizational capability to deploy, manage, and scale them effectively. The principles of platform engineering are the key to unlocking that capability. Therefore, building a robust, self-service platform is no longer just a technical option; it is the core strategic imperative for any enterprise serious about harnessing the transformative power of generative AI to innovate, compete, and lead in its industry.