The cost of AI isolation

Platform engineering has established itself as the discipline that creates acceleration paths (golden paths) for development teams. However, many organizations treat AI capabilities as an afterthought, creating isolated AI silos. Just as data platforms once existed in complete isolation from the rest of the infrastructure, requiring separate governance, tools, and expertise, history is now repeating itself with AI initiatives.

The consequences of this isolation are significant. Development teams resort to shadow AI, introducing uncontrolled costs, security vulnerabilities, and compliance risks. Without platform-level integration, each team reinvents governance rules, struggles with model selection, and lacks visibility into AI spending. More importantly, the platform itself misses the opportunity to use AI to enhance developer experience and operational excellence. The challenge isn't whether to adopt AI, but how to integrate it seamlessly into the platform fabric while maintaining the principles of self-service, standardization, and security that define platform engineering.

1. Integrate AI coding assistants

The first and most immediate opportunity is to standardize how development teams interact with AI coding assistants. Rather than letting each team configure their own GitHub Copilot, Cursor, or Claude environments, provide pre-configured templates that directly integrate organizational knowledge into the AI context.

Consider this scenario: every service template in your platform catalog includes carefully crafted system prompts that understand your company's specific architectural decisions, development standards, and security requirements. When a developer uses an AI assistant to write code, it already knows that your organization uses specific error handling patterns, follows certain naming conventions, and must comply with particular regulatory requirements. This approach goes beyond simple development standards. By integrating approved MCP (Model Context Protocol) servers, you create a secure bridge between AI assistants, developer workstations, and your internal systems, eliminating the attack surface created by unverified third-party integrations (tool/supply chain attacks).

To implement this:

- Create platform templates that include .cursorrules, .github/copilot-instructions.md, .claude/* files, or similar configuration files.

- Pre-fill these files with agent-usable tools, security and development standards, and recommended integration patterns.

- Include your organization's Architecture Decision Records (ADR) in a standard format.

- Add well-written code examples from your own repositories.

This approach significantly reduces code review cycles and nearly eliminates security policy violations in AI-generated or AI-reviewed code.

2. Provide self-service AI agents in the platform catalog

Beyond assistants for producing code, accept that AI agents will gain prominence in modern architectures. This step provides AI execution capabilities to your organization. Just like you provide templates for microservices, databases, and message queues, project teams need templates to deploy AI agents with production-ready capabilities built in from day one.

These templates should simplify selection and abstract the deployment complexity of models while providing enterprise-level functionality:

- Pre-configured connections to approved models

- Automatic cost allocation to team budgets

- Built-in circuit breakers for anomaly detection

- LLM-specific security mechanisms (prompt guardrails)

You can integrate directly with SaaS models, with cloud AI platforms that act as gateways (such as AWS Bedrock, Google Vertex AI, and Azure OpenAI), or deploy models on self-managed GPU infrastructure; ideal for on-premises environments, data-sensitive scenarios, or cost optimization needs. Pre-established integration with tools like LiteLLM lets teams switch between different LLM providers with little or no code changes, while quota management prevents uncontrolled costs. Each agent deployed from these templates comes with standard observability designed for AI agents (for example, via OpenTelemetry), team-level cost tracking, and automatic compliance with data residency requirements.

For more complex multi-agent orchestration cases, dynamically rendered LangGraph or n8n resources let teams deploy sophisticated AI agents and AI-powered workflows in minutes rather than weeks, with complete confidence in security and cost control. The template should also include pre-built integrations with your observability stack, so that orchestrated AI agent behavior is as visible and debuggable as any other service on your platform.

Security considerations are critical. While MCP servers typically run locally on developer workstations, remote execution requires advanced security analysis to prevent supply chain attacks, robust authentication and authorization mechanisms, appropriate credential management and rotation, and regular security audits of model interactions.

3. Enhance platform operations with AI

The platform itself becomes smarter by incorporating AI into its core operations.

Production deployment reviews by Change Advisory Boards (CAB) represent a good example. Instead of relying solely on static rules and manual reviews, an AI-powered system analyzes the changes planned for a production deployment, understanding not only code changes but their potential impact on system behavior. AI can analyze Merge Requests covering Infrastructure as Code (for example, Terraform plans and Kubernetes manifests) together with application code changes to understand the full scope of the change and its potential blast radius. It automatically generates comprehensive risk assessments that highlight potential issues that static analysis might miss, such as subtle performance regressions or architectural drift.

To go further, once you train models with your organization's deployment history (including successful releases and post-mortems), such a system could automatically flag deployments with patterns similar to past incidents, generate detailed impact assessments that consider business context (such as avoiding risky changes during peak shopping periods), and suggest optimal deployment windows based on historical system load patterns. Keep in mind that this requires significant technical mastery of AI, costs, and quantities of available data.

4. Use AI for intelligent operations and incident response

Modern platforms generate overwhelming amounts of telemetry data—logs, metrics, traces, and events—that no human team can fully analyze in real-time.

Using machine learning models to identify subtle patterns that precede incidents isn't new. For almost 10 years, people have talked about AI for Ops that would automatically correlate seemingly unrelated events across distributed systems and suggest remediation steps based on historical resolutions. This goes beyond simple threshold-based alerting to understand complex interactions between services, infrastructure, and user behavior. However, these solutions are still rare. Only a small number of vendors have developed these capabilities based on their own models with built-in learning capabilities.

In contrast, using generative AI on ongoing incidents to provide operators with diagnostics and remediation suggestions is a breakthrough. Integration with specialized tools and operations-focused models can analyze logs, metrics, and events in natural language, making it easier for on-call engineers to query system state during incident response situations, which are typically stressful. Since you're dealing with production systems, watch out for:

- Integrations (MCP servers) granted to the generative AI model, which can be entry points for attackers. Validate and harden these servers.

- Potential hallucinations that can lead to unintended destructive actions (for example, data or resource deletion). Use a semi-assisted AI approach where an operator maintains control over actions.

5. Add conversational platform interfaces

The final transformation involves reimagining how users interact with the platform itself. While APIs and web interfaces like Backstage remain essential, adding a conversational AI layer democratizes platform access and enables complex multi-tool workflows that would be too complex via traditional interfaces.

This conversational interface doesn't replace existing tools but orchestrates them intelligently. When a CTO asks "What is our current cloud spending trend and which teams are driving the increase?", the system triggers a complex workflow that queries cost management APIs, correlates team ownership data, analyzes deployment patterns, and generates an executive-ready visualization, all via a natural language interaction.

To build this capability, create an AI agent with deep understanding of your platform's APIs, integrated with collaboration tools like Slack and Microsoft Teams. Platform users can then:

- Request infrastructure provisioning: "I need a staging environment for the payment service with yesterday's production data"

- Query system state: "Show me all services that haven't been deployed in 30 days"

- Get compliance reports: "Generate a GDPR audit report for customer data access in Q3"

All of this works through conversational interfaces that handle the complexity of orchestrating multiple platform tools.

Build your platform and AI strategy: a product-oriented approach

Integrating AI into platform engineering isn't optional, it's necessary. Organizations that fail to provide structured AI capabilities through their platforms face the same challenges that led to the creation of platform engineering in the first place: shadow IT, inconsistent practices, security vulnerabilities, and inability to scale.

However, each of these five aspects requires significant engineering investment, dedicated resources, and organizational change management. This is where the fundamental principle of platform engineering - treating the platform as a product - becomes crucial. Prioritization is essential, guided by measurable value relative to implementation cost. Adopt a product mindset and identify specific metrics that demonstrate the value of each AI capability. For example:

- Reduced average Merge Request time for AI coding assistants

- Decreased production incident resolution time for AI-enhanced operations

- Reduced platform support tickets for conversational interfaces

Link each metric directly to business outcomes that matter to stakeholders: faster time to market, improved system reliability, or reduced operational costs.

Where should you start?

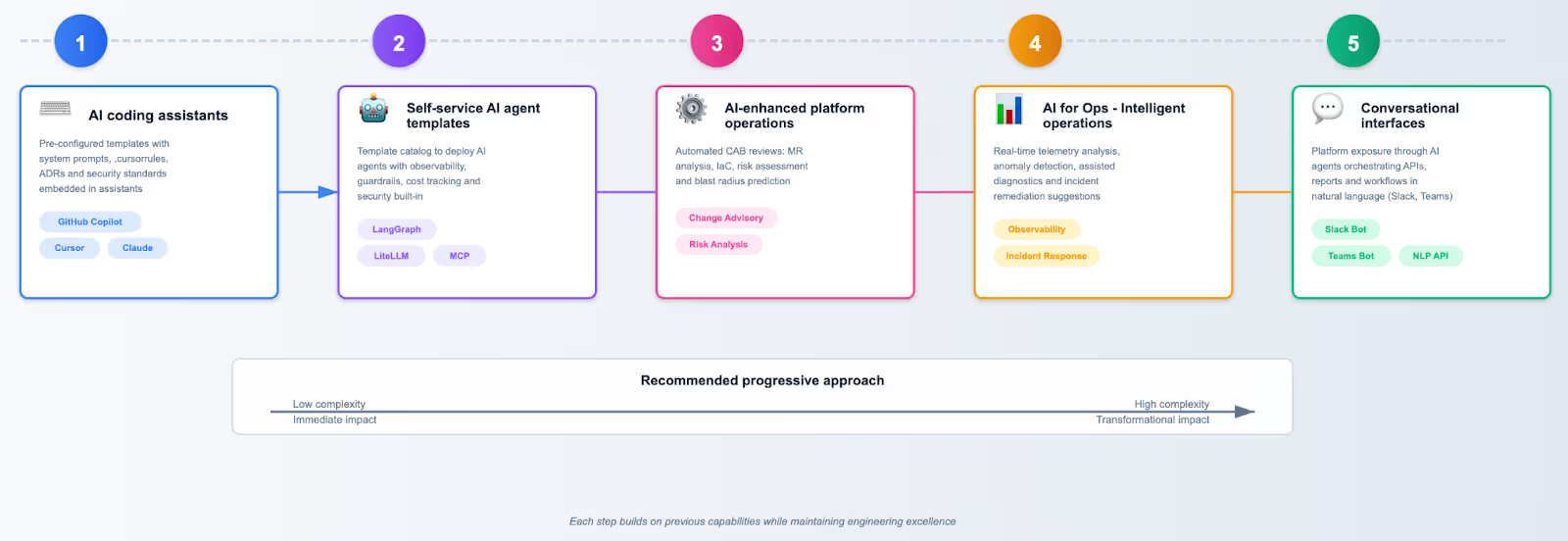

The implementation sequence matters. Most organizations find success by starting with AI development assistants because they provide immediate and measurable developer productivity gains with relatively low implementation complexity. The governance patterns you establish here (prompt engineering standards and security controls) create the foundation for subsequent AI capabilities. From there, the journey typically progresses to AI agent templates (extending the governance model), then to operational AI (using established AI infrastructure), and finally to conversational interfaces (building on all previous capabilities).

Platform engineering succeeds through iterative improvement and continuous feedback loops. Treat each AI capability as a minimum viable product. Collect metrics and make iterative adjustments based on actual usage and value delivery. Establish clear success criteria before implementation. For example, if AI coding assistants don't reduce PR cycle time by at least 20% within three months, investigate the reasons before moving to the next aspect. If AI agent templates aren't adopted by at least 50% of teams within six months, understand the obstacles before expanding further.

Platforms that successfully integrate AI won't just be more efficient, they'll enable entirely new capabilities. The question for every CTO and platform leader isn't whether to integrate AI into their platform, but how quickly they can do so while maintaining the engineering excellence their organizations depend on.