This article is based on a recent talk I gave on AI-powered migrations. You can watch the full presentation here for more technical details and demonstrations.

Recently I gave a talk titled ‘Is the $2 billion migration consulting industry over?’ To summarize in short the short answer is: not exactly. After analyzing public case studies from companies like Slack, Google, Airbnb, and customers at Ona I’m of the belief: AI agents are now capable of handling large-scale migrations in ways that weren't possible 6-12 months ago. The blog and talk I’ve written to provide some commentary on what I'm seeing across the industry.

Here’s the thing: migrations have always been pretty brutal, I know because I've led them before. You add Jira tickets to team backlogs, send email chases constantly but because teams have product managers and KPIs and roadmaps, very little will budge for central standardization goals. Sure, a few teams might get excited because they see a reason to migrate to a new tool, pattern or library, there's always a long tail of teams who simply aren't incentivized and end up frustrated that something familiar is being replaced. Their current setup works, so why add risk?

In reality, most migrations are hard, slow, not a lot of fun and pretty thankless.

In AI, something shifted in 2024: context windows grew, tool use capabilities emerged, letting agents take autonomous actions like running CLIs, editing files, and checking test results so they can now operate independently. As a result, companies like Slack started to post success stories of large-scale migrations like automating 15,000 test files, and AirBnB completing 1.5 years of work in 6 weeks. My bet is that this migration technology will spread across the industry and become as ubiquitous as CI/CD where we just ‘expect it’ to be in place.

As I’ll showcase today with case studies: the question isn't whether AI can do large-scale migrations but: which types of migrations work, and when should you invest?

What are the different types of migration?

Everyone has a different definition of "migration." For the purpose of today's conversation, let me clarify what I mean. Migrations can include:

- Library upgrades (Enzyme to React Testing Library, as we'll see)

- Language version bumps (Python 2 to 3, Java 8 to 17)

- Security vulnerability remediation (CVE patches across repositories)

- API changes (deprecated methods to new APIs)

- Framework migrations (Angular to React, though more complex)

- Architectural standardization (enforcing ADRs and RFCs across codebases)

- Migration from legacy languages (COBOL to modern languages)

- Cloud modernization (lift and shift migrations)

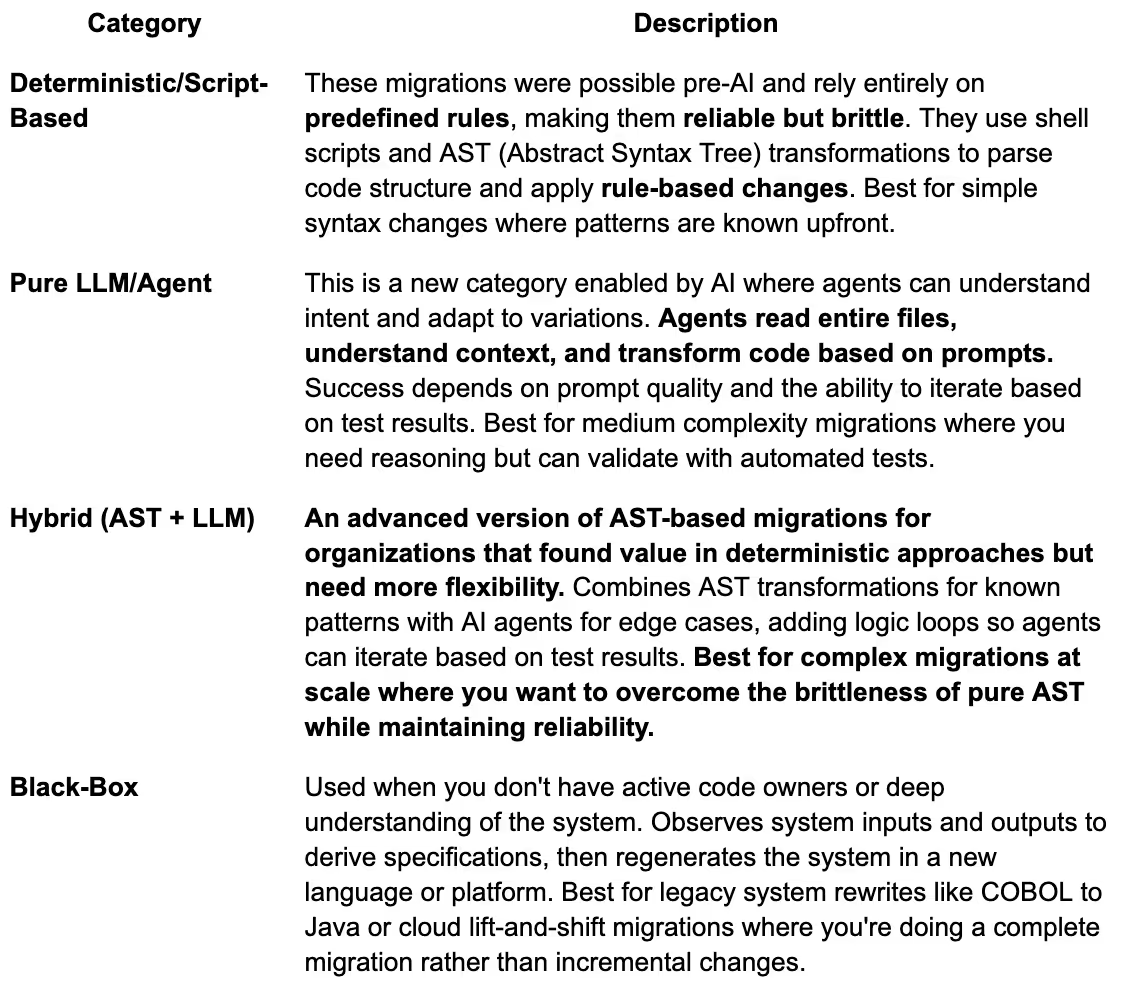

By looking at these different use cases and the customers we'll explore later in this post, I've started to see some emerging patterns and categories. Let me break those down for you in this table, and then we'll run through the case studies and talk more about how these categories play out in practice:

Now let's see how this works in practice with real examples.

Slack: AST + LLM hybrid approach for Enzyme to React testing library migration

Interestingly, both Slack and Airbnb tackled the exact same migration, and their approaches reveal a lot about what works. Slack needed to migrate over 15,000 test files from Enzyme to React Testing Library. But, here’s what I found most interesting: they tried the pure AST approach first, but it couldn't handle all the edge cases. Then they tried pure LLM, but success rates were inconsistent, hovering around 40-60%. What worked in the end was combining both.

Obviously, Slack is a good example that these migrations can work at scale. But the key learning I took away was that context engineering drove success more than prompt refinement. This is interesting because we hear a lot about prompt engineering these days, but actually just making sure the agent had the right context was clearly the most important thing for Slack's success. Specifically, they fed the LLM around 40-50 example test files, component source code, and documentation. What's nice is that their implementation is open source, so you can read through their approach and learn from it.

Read the full Slack case study

Airbnb: State machine approach using pure LLM to migrate 3,500 test files in 6 weeks

Airbnb faced 3,500 test files to migrate and estimated 1.5 years manually. They built a state machine where each file moved through discrete validation gates: Enzyme refactor, Jest fixes, lint checks, TypeScript validation. The key was that files only advanced when validation passed at each stage. The breakthrough was their automated retry loops. When a step failed, the system fed error messages back to the LLM with up to 100,000 tokens of context, including sibling test files and component source, then automatically retried the transformation. This pattern of validate-fail-retry with rich error context brought high success-rates.

What's remarkable about this is they were able to achieve 97% automation, with the remaining 3% requiring manual completion using the AI-generated code as a baseline. This further corroborates that large-scale migrations are genuinely possible today. What I learned from Airbnb's example echoes what we saw with Slack: they didn't perfect prompts upfront, they perfected the feedback loop. Giving the agent concrete error messages and rich context to iterate on was what made this work.

Read the full Airbnb case study

Google: Fine-tuned model for 32-bit to 64-bit Integer migration

Google migrated from 32-bit to 64-bit integers by fine-tuning Gemini for this specific pattern. Here's the reality: you probably don't need to fine-tune your own model. Frontier models like Claude or GPT-4 work well off the shelf for most migrations. Google has unique resources, custom infrastructure, and ML expertise that justify this investment. I include it here to show the full spectrum and to manage expectations. Don't invest in fine-tuning unless you have very specific patterns that frontier models can't handle.

What's common across all these use cases

After looking at these case studies and talking with customers doing similar work, I've noticed some consistent patterns that are worth calling out.

The 80% Rule: every migration hits a ceiling, and that's okay. Every case study hit around 80% automation. Slack achieved 80% on their hybrid approach. Airbnb reached 97% on the first automated pass, but that remaining 3% still needed human baseline and manual completion. As Airbnb put it in their write-up, "the initial automated pass migrated 75% of files in under four hours, but around 900 files remained." The last 20% is exponentially harder to automate, and honestly, you shouldn't try. The edge cases are the most complex cases requiring business context, judgment calls, or understanding something unique about your codebase.

Rich context beats perfect prompts. This might be the most counterintuitive finding because everyone talks about prompt engineering. However, both Slack and Airbnb emphasized that getting the right context to the agent mattered far more than perfecting prompts. Slack fed the LLM around 40-50 example test files, component source code, and documentation. Airbnb went even further, providing up to 100,000 tokens of context for complex files, including sibling test files, examples from the codebase, and the full component source. The pattern is clear: throw rich, relevant context at the problem rather than endlessly tuning prompts. If your migration success rates are low, ask yourself whether the agent has enough examples and context to understand the patterns, not whether your prompt needs more refinement.

Sequential validation with automated retry loops is the pattern. Both successful examples broke migrations into discrete steps with validation gates. Slack ran: convert → test → lint → TypeScript compilation. Airbnb used: Enzyme refactor → Jest fixes → lint checks → TypeScript validation. Files only advance when validation passes. When a step fails, feed the error message back to the agent and retry automatically. This validate-fail-retry pattern with concrete error feedback is what enables high success rates. It's not magic, it's systematic iteration.

Human review is mandatory, and vibe coding doesn't work here. I see conversations online and in person sometimes where people think AI can magically update or migrate codebases without human review. That's a misconception I want to address directly. To my knowledge, I haven't seen anyone successful with that approach. Vibe coding itself has yet to be proven as a useful pattern outside of prototyping and first-draft iterations for code changes. Larger, more complex changes from agents still need to be reviewed by humans. The code you're changing must have someone who can review and understand what the agent did. There is no version of large-scale migration I'm seeing that works on abandoned codebases. You need active code owners. That's okay because we can still get significant value from this, but you can't just vibe code the changes and smash merge.

The burden of effort shifts in interesting ways. The investigation phase shrinks dramatically because agents can parse entire codebases instantly. Think about pre-AI migrations: if you're migrating from GitLab to GitHub, developers need to understand GitHub's CI syntax, read documentation, figure out best practices, and ask questions. That creates inertia. With agents, you can skip those questions by providing a first draft. The agent can raise a PR with best practices already applied and can comment explaining, "I've done this for this reason," educating through examples rather than forcing busy developers to read documentation.

The development phase shrinks too because the transformation is automated. However, the testing and verification phase doesn't shrink. This is critical and catches people off guard. The first time a developer sees this work is no longer an email or confluence document explaining the migration. It's a pull request. That can be fatiguing if not done thoughtfully. As Google noted in their write-up, their "toolkit also aims to assist engineers and let them focus on complex aspects, but without isolating them from the process." It's really important to use that PR as an education opportunity. The commit history should tell a story explaining how the agent did what it did. Ideally, developers can view the agent's thinking, see the conversation it had, or understand the workflow prompts. All of that context makes review manageable rather than mysterious.

Should you invest in large-scale migrations with AI today?

While not every organization should invest in agent-powered migrations today, if you're at a certain scale, the opportunity is significant. These migrations really start to work when you exceed about 100-500 engineers and have enough repositories to justify the setup investment. In the talk, I walked through an ROI framework with a graph showing when you hit break-even. I don't have space to cover that in detail here, but if it's something you're interested in, let me know and I'll potentially write a follow-up post. Pragmatically, one of the best ways to figure out your ROI is simple: run a migration on a single repository, calculate how long it takes, and extrapolate from that to understand your potential savings.

If you start getting value from local approaches using tools like Cursor or Claude Code on your machine, the next step is to think about scale. This is where orchestrated approaches come in. Platforms like Ona can run these migrations in the background at scale, coordinating hundreds of parallel operations across your repositories. At Ona, we're an AI software engineer platform built specifically for organizations like Bank of New York Mellon and Hargreaves Lansdown, where security and privacy are paramount. Everything runs in your VPC, and we handle the orchestration complexity so you can focus on defining migration strategies. If you'd like to learn more, check out our automation capabilities or get a demo to see how this works in practice.

The Future is Bright for High Agency Platform Teams

Here's what strikes me about this moment: the drudgery of large-scale migrations is being significantly lifted by AI. For platform teams who might have felt anxious about what AI means for their role, being able to orchestrate large-scale migrations with agents puts power back in your corner. Platform teams with high agency can now have a significant impact across their organizations in ways that simply weren't possible before.

Platform teams will need to reinvent themselves somewhat. Platform as a product, focusing on the right problems, understanding your users—these principles are more important than ever. Our tools are more powerful than ever, which means directing them toward the right problems is now critical. The world is moving faster. Leadership expects more. We need to leverage these tools to deliver at a pace and scale that matches what's now possible.

This fundamentally changes what's feasible. You can tackle technical debt that's been sitting in the backlog for years. You can modernize systems without consuming months of developer time. You can standardize practices across hundreds of repositories. Migrations that we previously wouldn't have considered are now viable because the investment threshold is way lower. The only way to get started is to play around and explore these capabilities. Start to understand what they're good at and what they're not good at through hands-on experimentation.

To loop back to the original question about the $2 billion migration consulting industry: is it over? I don't think so. Manual review is more important than ever with AI-related transformations, so there will still be a need for humans in the loop. But I would expect most companies to start building their own capabilities to run these large-scale migrations, which will eat into what migration consultants were doing before. Consultants will shift to different types of migrations, like the black-box migrations we discussed: modernization from COBOL, cloud lift-and-shift, and other scenarios where you don't have developers to review pull requests. That's where a lot of the consulting industry will likely end up, because hybrid and pure LLM migrations can now be brought in-house.

Large-scale migrations with AI agents are ready today. Platform teams are at the center of this transformation. The question isn't whether this is possible anymore. The question is: which migrations are you going to tackle first?

Want to dive deeper into the technical details? Watch the full talk here.