This article is based on my presentation at NVIDIA GTC 2025, where I discussed how platform engineering teams can accelerate AI-native application development and deployment at scale.

In 2025, enterprises are moving faster than ever to deploy AI models into production. From healthcare and manufacturing to financial services and telecom, organizations are transforming every line of business with intelligent systems and AI-native applications. Behind this acceleration, ITOps, DevOps, and CloudOps teams are reorganizing, with a new emphasis on internal platform engineering. Why? Delivering innovation at AI scale demands more than infrastructure. It requires infrastructure that’s composable, orchestrated, hybrid-ready, and built to serve developers - not slow them down.

Over the past two decades, enterprise architecture has evolved through 10-year transformations, from the web-native era to cloud-native development and now into the AI-native phase. This latest shift is defined by containerized models, real-time inference, and globally orchestrated GPU infrastructure. As with previous waves, success in the AI-native era depends on adopting composability, scalability, and automation as core design principles.

This article offers a practical walkthrough of how platform engineering teams can help build and scale AI-native apps with pre-composed infrastructure templates - leveraging Vultr, NVIDIA NIMs, and Run:AI to deliver production-ready results faster than ever.

Platform engineering: The foundation for AI-native development

Many organizations are expanding their internal platform engineering teams to meet the pace and complexity of AI-native application development. These teams act as centers of excellence, building and maintaining infrastructure as composable, reusable artifacts.

Platform engineering teams treat infrastructure as version-controlled, containerized building blocks that can be accessed via internal developer platforms (IDPs) or APIs. These components - covering compute, storage, networking, and services - are treated as products. They’re governed and maintained with service-level agreements (SLAs), and include built-in policies for security and compliance. This product mindset allows teams to deliver infrastructure that's not only scalable, but also predictable and safe to use across the organization.

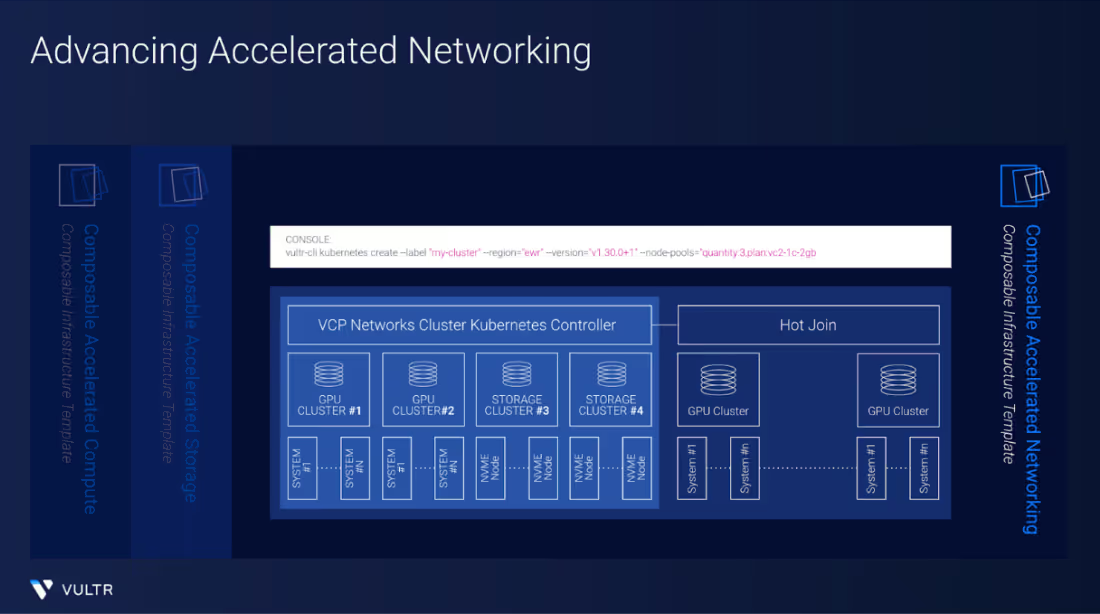

A critical part of this strategy is the creation of "golden paths" for AI development: standardized infrastructure-as-code templates that simplify the process of setting up and scaling GPU environments. These templates handle the heavy lifting of provisioning, scheduling, and configuring GPU resources, allowing engineers to focus on model development instead of backend setup. Integrated policy controls ensure security and compliance are enforced by default. These templates are exposed in internal developer portals to provide fast, safe, and repeatable self-service.

These practices empower developers to move quickly within safe boundaries while allowing platform teams to maintain consistency and reliability across all AI workloads.

The hybrid cloud architecture behind AI-native infrastructure

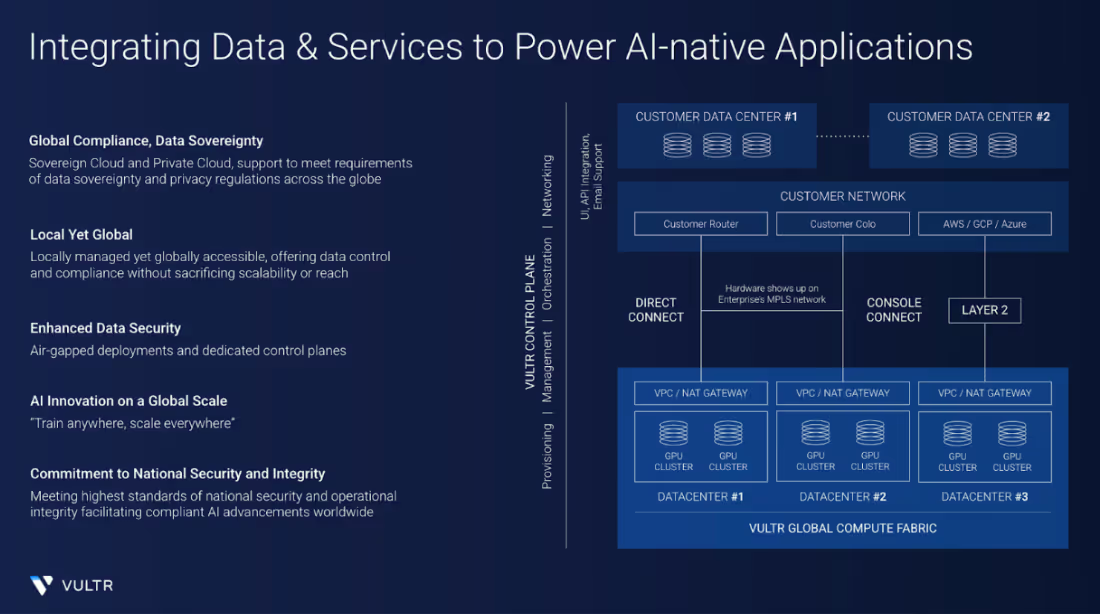

While golden paths and pre-composed templates simplify how developers package and consume infrastructure, the underlying architecture must also support global scalability and secure data movement. AI-native applications rely on infrastructure that spans public cloud, on-prem systems, and edge environments. That’s why platform engineering teams must go beyond abstraction and take ownership of the hybrid, multi-cloud architecture that ties everything together.

At Vultr, hybrid cloud capabilities are built-in. Enterprises can connect on-premises data centers directly to cloud environments using secure, private networking channels - eliminating exposure to the public internet. Operational data can be streamed into GPU clusters, inference engines, or vector databases through reusable and secure networking modules.

This infrastructure model includes:

- Direct integration with AWS, Azure, and Google Cloud via Vultr Direct Connect, that can be expanded by Console Connect, enabling self-service Layer 2 or Layer 3 connections between Vultr and other cloud providers.

- Private access to Vultr cloud servers from over 900 global data centers and enterprise sites using Console Connect’s Edge Port service.

- Edge-ready compute with Vultr’s global footprint of 32+ cloud data center regions, growing edge presence, and 5G access—bringing low-latency GPU and compute power closer to where data is generated and processed.

- Composable infrastructure through an API-first approach that supports infrastructure as code via Terraform, seamless containerization, and the flexibility to integrate the tools and services that teams already use in their AI and cloud-native stacks. This includes orchestration with the Vultr Kubernetes Engine, enabling platform teams to easily deploy and manage containerized AI workloads across hybrid environments.

These components work together to support secure, scalable, and distributed AI workloads. Whether data originates in a hospital server, a field sensor, or an enterprise application, Vultr’s composable architecture ensures it reaches the right compute resource reliably and with low latency.

Declarative APIs and policy-based controls give platform teams the tools they need to manage multi-tenant environments, enforce deployment standards, and integrate with developer platforms and CI/CD pipelines. With the hybrid foundation and orchestration layer in place, AI infrastructure becomes globally available and operationally seamless.

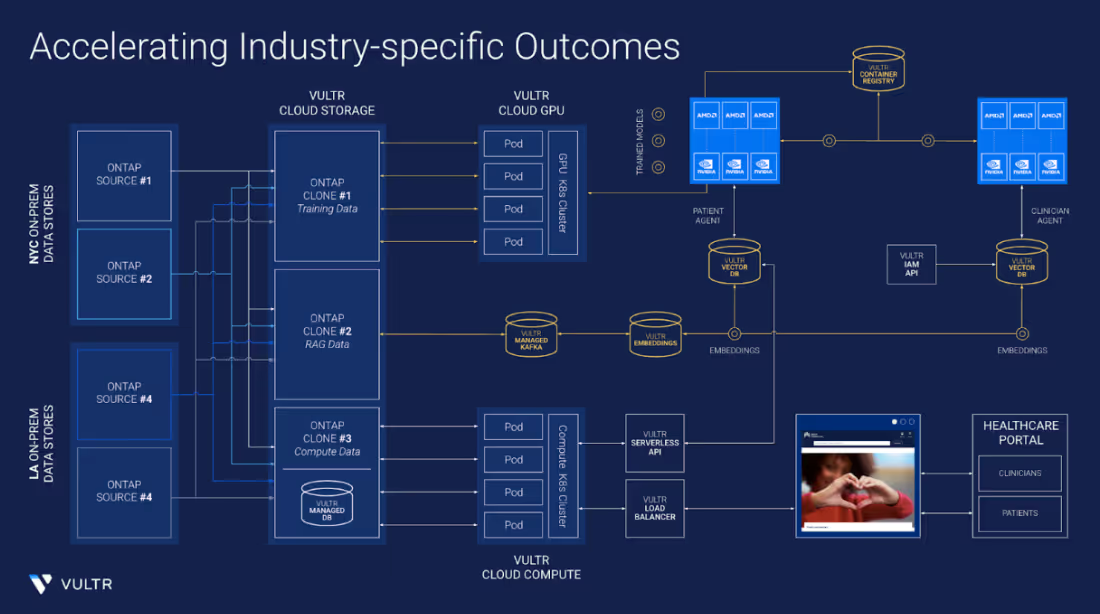

Putting it into practice: Templatized AI agents in healthcare

Let’s look at how this all comes together in a real-world example. Imagine a platform engineering team standing up AI-native agents for a healthcare provider. Using pre-composed infrastructure-as-code templates, they deploy:

- GPU clusters (e.g., B200 or H100 or MI325X)

- Managed storage and vector databases

- Kafka pipelines for streaming

- Hybrid cloud connectivity to medical records systems

This stack supports patient- and clinician-facing AI agents on the same core infrastructure. For a patient, an agent might respond to a question like, "What are my latest lab results?" by retrieving data from a vector store and generating a simple explanation: "Your results look good, but glucose levels are slightly elevated."

The response is more contextualized for a clinician viewing the same patient’s record: "Glucose levels remain high. Recommend a follow-up in 3 months. Consider reinforcing dietary changes."

All of this is made possible by infrastructure templates that are version-controlled, centrally governed, and automatically provisioned using tools like Run:AI. Run:AI handles the orchestration layer, ensuring GPU workloads are scheduled efficiently across clusters, even in multi-tenant environments - so AI engineers can train and deploy models without managing compute allocation. The same pattern can be used for other industries or use cases - customer support, field operations, internal tooling - by branching and customizing the core templates.

Templates are surfaced in the internal developer platform, where teams can deploy full AI environments in just a few clicks or through Git-based workflows. The result is faster delivery, higher consistency, and freedom for developers to focus on innovation rather than infrastructure setup.

Completing the shift to AI-native architecture

To complete the transition into the AI-native era, enterprises must embrace a platform engineering approach, which will enable them to operate globally confidently and quickly.

This marks the latest stage in a long arc of enterprise transformation. Just as the web-native and cloud-native eras demanded new ways of thinking about infrastructure, the AI-native era requires platform teams to redefine how infrastructure is designed, deployed, and delivered. By turning infrastructure into reusable, governed product artifacts, platform engineering teams enable AI development to scale safely, repeatably, and with minimal friction, transforming infrastructure from a bottleneck into a force multiplier.

Vultr, in partnership with NVIDIA and Run:AI, is enabling this new reality by offering GPU-ready infrastructure that deploys in minutes, scales globally out of the box, and eliminates the heavy lifting typically required to support production AI workloads. With additional support for AMD GPUs, including the Instinct™ MI325X, organizations gain even greater flexibility to build and scale diverse AI applications on their terms.

This is the next great infrastructure wave, and platform engineers are the ones making it real. Let’s build it.

Want more? Watch my webinar Build AI-native infrastructure with GPUs and platform engineering.

This article was sponsored by VULTR for PlatformCon 2025.