At Cloudsmith, we recently explored the Kubernetes 1.34 release notes in depth. This post highlights a more focused aspect: the brand-new Alpha features introduced in this version. These Alpha features are early-stage, disabled by default, and not recommended for production environments, but they certainly give a strong sense of Kubernetes' evolving direction and future capabilities.

Many people in the Kubernetes community, especially those running AI workloads on Kubernetes, will be excited to see #4381 DRA: Structured Parameters graduating to Stable in the “sig-node” feature group. With the graduation of Dynamic Resource Allocation (DRA) to Stable, Kubernetes now has a more flexible way to handle specialised devices such as GPUs or AI chips, which previously often meant custom scripting or complex setup.

Kubernetes can now allocate and prepare these devices for you automatically, much as it already does with storage or CPUs. In Kubernetes v1.34 you can use part of a GPU (like NVIDIA MIG) instead of wasting a whole one on a small job. Admins can define device types and rules such as "this is a GPU with X specs" in a central place, and developers can then just say "I need a GPU of type X" and Kubernetes takes care of the rest, including picking a node that has one.
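As a rough illustration of that split between admins and developers, the manifests below sketch a DeviceClass, a ResourceClaimTemplate, and a Pod that consumes the claim. They assume the resource.k8s.io/v1 API that comes with DRA's graduation. The driver name gpu.example.com, the object names, and the image are hypothetical placeholders, and a real DRA driver would have to be installed to publish matching devices.

```yaml
# Admin side: describe what counts as "a GPU of this type".
apiVersion: resource.k8s.io/v1
kind: DeviceClass
metadata:
  name: example-gpu                  # hypothetical class name
spec:
  selectors:
  - cel:
      expression: device.driver == "gpu.example.com"   # hypothetical driver
---
# Developer side: ask for one device of that class.
apiVersion: resource.k8s.io/v1
kind: ResourceClaimTemplate
metadata:
  name: single-gpu                   # hypothetical template name
spec:
  spec:
    devices:
      requests:
      - name: gpu
        exactly:
          deviceClassName: example-gpu
---
apiVersion: v1
kind: Pod
metadata:
  name: gpu-job                      # hypothetical Pod name
spec:
  resourceClaims:
  - name: gpu
    resourceClaimTemplateName: single-gpu
  containers:
  - name: app
    image: registry.example.com/app:latest   # placeholder image
    resources:
      claims:
      - name: gpu                    # refers to the entry under spec.resourceClaims
```

The Pod never names a node or a specific device. The scheduler is expected to pick a node where a device matching the class is available.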

DRA advancements in v1.34

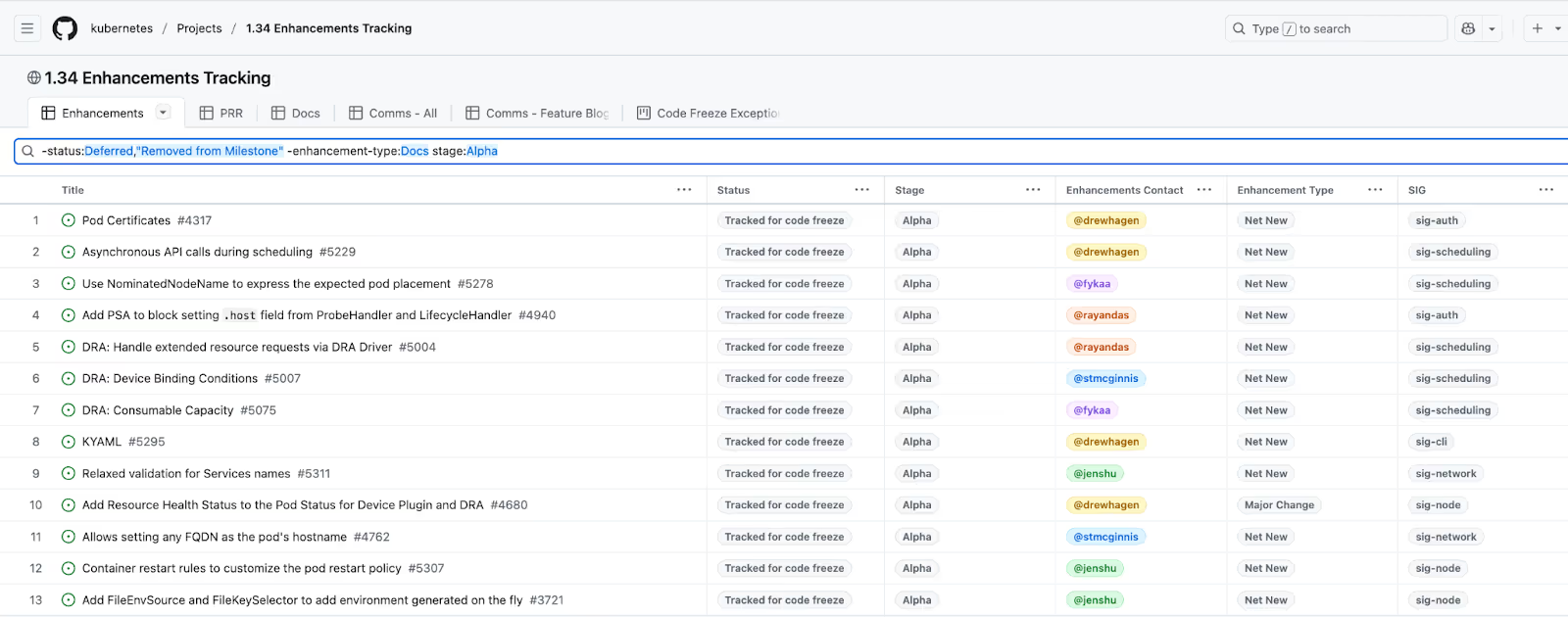

Other than the above-mentioned DRA structured parameters graduating to “Stable”, there are three net-new Alpha additions related to DRA in v1.34.

Firstly, DRA gains Alpha support for handling extended resource requests via a dedicated DRA driver (Enhancement #5004). This improves support for specialised hardware through a focused DRA driver.

Secondly, we see the introduction of DRA Device Binding Conditions (Enhancement #5007). This prioritises devices based on readiness. For example, when multiple candidate devices are available, the scheduler should prefer devices in the following order:

- Devices without any BindingConditions (i.e., immediately usable)

- Devices with BindingConditions (i.e., require preparation)

Thirdly, there are DRA improvements around consumable capacity (Enhancement #5075). This introduces the ability to allocate a shareable device via DRA multiple times in scenarios where pre-defined partitions are not viable, for example because there would be too many of them. The DRA driver declares which device-level resources it can guarantee or reserve for a specific request, and which values are valid to reserve. Users can in turn specify in their device requests how much of a given device resource they require.

To round it off, there's a fourth DRA-related Alpha feature - Resource Health Status for Devices (Enhancement #5310). This surfaces health information for devices in the Pod Status, helping Kubernetes natively detect and respond to failing or degraded devices, which is especially useful in GPU-heavy environments.

KYAML: A friendlier approach to YAML formatting

Enhancement #5295

The vast majority of Kubernetes examples are written in YAML, using the same "conventional" syntax that kubectl get -o yaml emits.

This enhancement aims to address one of the long-standing challenges in Kubernetes: YAML complexity. While simple YAML is readable, deeply nested configurations are prone to indentation errors and other formatting issues, especially when templating tools like Helm are involved.

This enhancement introduces:

- A formal YAML dialect fully compatible with existing parsers.

- Tooling and libraries for conversion and formatting.

- A kubectl output format that uses the KYAML style.

- Updated docs and examples across the Kubernetes project.

The goal is clearer, less error-prone YAML that maintains backwards compatibility.
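To give a feel for the style, here is a small ConfigMap written in a KYAML-like form: flow-style braces instead of significant indentation, double-quoted string values, and trailing commas. The object itself is a hypothetical example and the exact output of kubectl may differ in details. The kubectl output format is Alpha in v1.34, so expect it to need an explicit opt-in.

```yaml
---
{
  apiVersion: "v1",
  kind: "ConfigMap",
  metadata: {
    name: "demo-config",   # hypothetical name
    labels: {
      country: "NO",       # always quoted, so it stays a string and is not read as a boolean
    },
  },
  data: {
    retries: "3",
  },
}
```

Because this is still valid YAML, existing parsers should accept it unchanged, which is the backwards-compatibility point made above.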

Pod-level certificates made easy

Enhancement #4317

While Kubernetes has long supported certificate issuance, delivering these certificates securely and automatically to Pods has remained cumbersome. Enter PodCertificateRequest (#4317), a new Alpha API that simplifies this.

This enhancement introduces:

- PodCertificateRequest API: A new, scoped resource that lets Pods request certificates in a controlled way.

- Projected Volume Type: Allows the kubelet to handle provisioning and delivery of X.509 certificates directly into Pods.

Together, these features streamline secure certificate delivery without burdening developers with manual configuration.
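A minimal sketch of what consuming this could look like from a Pod is shown below. It assumes the Alpha feature and API are enabled and that a signer implementation exists in the cluster to issue the certificate. The signer name, Pod name, image, and mount path are hypothetical, and the field names reflect my reading of the v1.34 Alpha API, so check them against the API reference for your version.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: cert-demo                               # hypothetical name
spec:
  containers:
  - name: app
    image: registry.example.com/app:latest      # placeholder image
    volumeMounts:
    - name: pod-cert
      mountPath: /var/run/pod-cert              # hypothetical mount path
      readOnly: true
  volumes:
  - name: pod-cert
    projected:
      sources:
      - podCertificate:
          signerName: example.com/demo-signer   # hypothetical signer
          keyType: ED25519
          # Private key and certificate chain are written together to this file.
          credentialBundlePath: credentialbundle.pem
```

The application only has to read the file from the mounted path. Requesting and delivering the certificate is left to the kubelet.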

Scheduling overhaul: Async API calls

Enhancement #5229

This Alpha feature rethinks how Kubernetes handles API interactions in the scheduling cycle. While some parts of scheduling (like binding) are already asynchronous, this enhancement proposes a unified framework that allows all API calls to be handled in a non-blocking way.

The benefits include faster scheduling performance, better control over goroutine creation, as well as extensibility for future use cases. This could be especially beneficial for high-throughput clusters or environments running large-scale batch jobs.

Enhancing pod placement with NominatedNodeName

Enhancement #5278

The enhancement "Using NominatedNodeName to Express Expected Pod Placement" extends the role of this field beyond just preemption hints. It enables components such as the scheduler and external controllers to express pod placement preferences explicitly.

Use cases include retaining scheduling decisions across scheduler restarts, enabling PreBind plugins to resume operations seamlessly, and giving external components a way to signal preferred nodes for scheduling. This new feature strengthens coordination between different parts of the scheduling pipeline and improves resilience in complex environments.

Hardening Probes improves overall security

Enhancement: #4940

Kubernetes previously allowed users to set arbitrary host fields in probe handlers (like HTTPGetAction), potentially opening the door to SSRF (Server-Side Request Forgery) attacks. PSA Blocking for Probe Host Field aims to prevent this misuse by adding Pod Security Admission rules that restrict the host field, and by updating the baseline Pod Security Standard to enforce the restriction by default. The result is safer defaults that still leave flexibility for trusted workloads.
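For context, this is the kind of probe the restriction is aimed at. The manifest is a hypothetical sketch: the names, image, and target address are placeholders, and how admission reacts will depend on the Pod Security level applied to the namespace and on your cluster version.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: probe-host-demo                      # hypothetical name
spec:
  containers:
  - name: app
    image: registry.example.com/app:latest   # placeholder image
    livenessProbe:
      httpGet:
        # With host set, the kubelet sends the request to this address
        # instead of the Pod's own IP, which is the SSRF concern.
        host: 169.254.169.254
        path: /latest/meta-data/
        port: 80
```

Leaving host unset makes the kubelet probe the Pod's own IP, which is what most workloads want anyway.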

Relaxed validation for Services names

Enhancement: #5311

By relaxing validation for Service names, this enhancement aligns the Service name rules with other Kubernetes resources. Previously, Service names had stricter constraints (for example, they had to start with a letter). With this change, Service names can start with digits, validation becomes consistent across APIs, and internal Kubernetes code gets simpler. This small change has big usability benefits for naming conventions in real-world deployments.
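As a small illustration, a Service like the one below has a name that starts with a digit. The name, selector, and ports are hypothetical. I would expect the API server to reject it under the older rules and to accept it only where the Alpha feature gate for this enhancement is enabled.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: 2048-game        # hypothetical name that starts with a digit
spec:
  selector:
    app: game-2048       # hypothetical label
  ports:
  - port: 80
    targetPort: 8080
```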

Full control over pod hostnames

Enhancement: #4762

Some legacy applications rely heavily on specific hostnames, including Fully Qualified Domain Names (FQDNs). Kubernetes typically appends a cluster domain suffix, making migration tricky.

Setting an arbitrary FQDN as a pod hostname is intended to let pods use any user-defined FQDN as their hostname, and to let DevOps teams have this FQDN written to /etc/hosts inside the pod. I’d be excited to see this feature graduate to Stable in later versions of Kubernetes, as it makes Kubernetes friendlier to traditional workloads expecting DNS-based hostname validation.
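A minimal sketch of how this might look in a Pod spec follows. It assumes the Alpha feature gate is enabled and that the new field is called hostnameOverride, which is my understanding of the KEP rather than something confirmed here. The hostname, Pod name, and image are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: legacy-app                                  # hypothetical name
spec:
  # Assumed field name; the value is used as the hostname inside the Pod
  # without the cluster domain suffix being appended.
  hostnameOverride: app01.legacy.example.com
  containers:
  - name: app
    image: registry.example.com/legacy-app:latest   # placeholder image
```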

Smarter container restart policies

Enhancement: #5307

This Alpha feature introduces container restart rules, enabling the kubelet to restart containers in-place based on specific exit codes, even when the Pod’s restartPolicy is set to Never. This functionality is particularly important for high-cost workloads, such as AI/ML training jobs, where rescheduling entire Pods is resource-intensive and time-consuming. By allowing containers to restart locally on certain retriable errors, this proposal supports faster recovery and better utilisation of expensive compute resources. The core idea is to enable fine-grained control via a new API, which defines how to treat specific exit codes and lays the foundation for future extensibility.

AI/ML workloads often span hundreds of Pods and are orchestrated in tightly synchronised patterns where progress depends on collective completion. In these cases, restarting a failed container in-place is far more efficient than rescheduling the entire Pod. For instance, when all Pods progress from a shared checkpoint, a retriable failure in one Pod should trigger a coordinated in-place restart rather than full Pod rescheduling. The current OnFailure restart policy isn't sufficient, as it cannot differentiate between recoverable errors (which should restart in-place) and hardware or critical failures (which require Pod termination and rescheduling). Furthermore, these workloads often rely on JobSets and PodFailurePolicy, which are server-side and incompatible with client-side OnFailure policies. This enhancement aims to bridge that gap by providing smarter, more adaptable restart behaviour directly at the container level.
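A sketch of how such a rule could be expressed is shown below, assuming the Alpha feature gate is enabled. The exit code 42, the names, and the image are hypothetical, and the field names follow my reading of the v1.34 Alpha API, so treat them as something to verify rather than as a reference.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: trainer-demo                             # hypothetical name
spec:
  restartPolicy: Never                           # Pod-level policy stays Never
  containers:
  - name: trainer
    image: registry.example.com/trainer:latest   # placeholder image
    restartPolicy: Never
    restartPolicyRules:
    # Restart in place only when the container exits with a code the
    # application uses for "retriable"; 42 is an arbitrary example.
    - action: Restart
      exitCodes:
        operator: In
        values: [42]
```

Any other exit code falls through to the container's restartPolicy, so a non-retriable failure still ends the Pod and leaves rescheduling to the Job controller.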

Dynamic environment injection via Files

Enhancement: #3721

Many tools, like HashiCorp Vault, generate secrets or environment variables at runtime, typically through init containers. But injecting these into the main container environment remains messy.

FileEnvSource and FileKeySelector offer a cleaner solution: environment variables are defined directly from files generated at runtime. You can use a new envFrom and env syntax that reads from paths like /env. This avoids fragile workarounds (like overriding entrypoints) or overcomplicated ConfigMap/Secret generation flows.

The approach is to add a new EnvVarSource or EnvFromSource for a container, as seen below:

```yaml
envFrom:
- fileRef:
    path: /env
    boolean: true
- fileRef:
    path: /env2
env:
- name: MY_JWT
  fileKeyRef:
    path: /env
    key: JWT
```

Wrapping up

Kubernetes 1.34 introduces a strong wave of innovation at the Alpha level, with significant progress across resource scheduling, hardware management, security, and application compatibility. Although these features are not production-ready yet, they reflect clear priorities for the Kubernetes ecosystem, particularly around supporting modern workloads like AI/ML and improving operational resilience.

While there’s a good chance you won’t be testing these experimental features when Kubernetes 1.34 is released at the end of August 2025, these Alpha features are still worth tracking as they mature. And if you have questions about the upcoming release, other than just the Alpha updates, there is a thread open on Reddit for Kubernetes 1.34.