In May 2024, GitHub's CEO Thomas Dohmke told the world that “with AI, anyone can be a coder” and claimed that “building software will be just as simple and joyful as stacking LEGO blocks”. Dohmke isn't wrong about AI's potential to democratize coding, but there's a crucial piece missing from this narrative that every platform engineering team needs to understand.

As GenAI accelerates the speed at which software is developed, platform engineering goes from being a nice idea to being business-critical. Companies without robust CI/CD pipelines, golden paths, and automated quality gates won't just struggle with AI adoption: they'll fail.

Quality is the new bottleneck

For decades, developer velocity has been the holy grail of software engineering. We've optimised for faster deployment cycles, reduced time-to-market, and increased feature throughput. The assumption was straightforward: more productive developers equals better business outcomes.

But there's a big difference between software that works and software that can be deployed to production. AI-generated code helps solve the immediate problem of getting something working, but it won’t necessarily meet your corporate coding standards, be architecturally consistent with the rest of your environment, or be free of security vulnerabilities.

The DevSecOps Survey conducted by GitLab in 2024 concluded that software developers spend 21% of their time writing new code. Most of the rest goes to debugging, testing, reviewing, fixing security vulnerabilities, and of course, meetings. When AI accelerates that 21%, it doesn’t just produce more code. It also generates more bugs, more broken builds, more code reviews, and more security vulnerabilities, all of which must be addressed before the code reaches production.

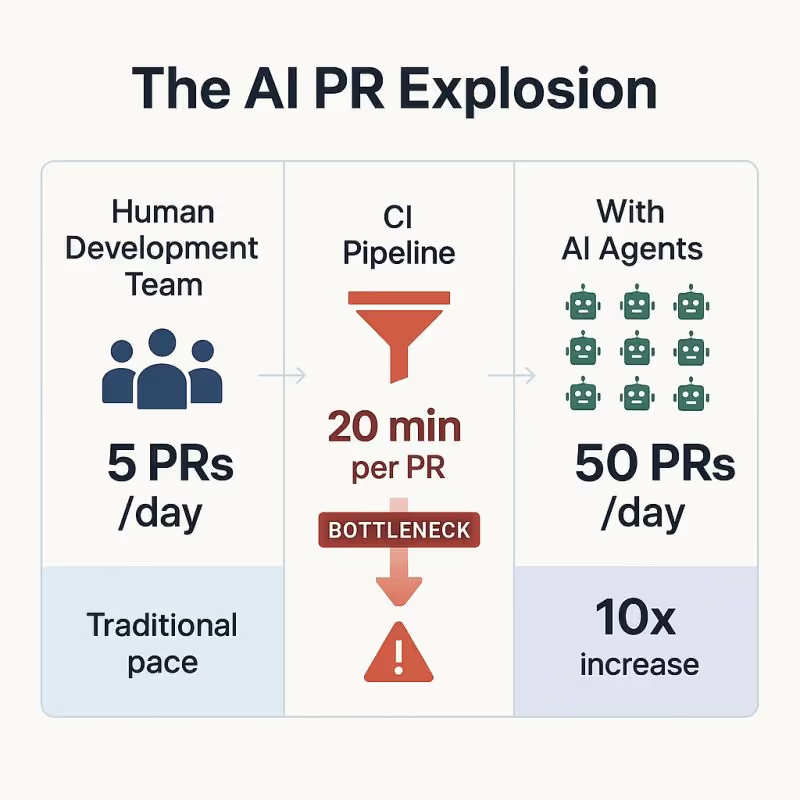

The math is brutal. Consider this recent question from Atulpriya Sharma: “If your current CI takes 20 minutes per PR, and AI agents submit 50 simultaneous PRs... that's 1000 minutes of queued work”. CI time is only part of the delay. In large organisations, particularly those in highly regulated environments, it’s not uncommon for the path to production to include several approval steps that take days or weeks to complete.
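The arithmetic behind that question can be sketched in a few lines. This is a simplified model rather than a capacity-planning tool: it assumes every PR takes the same time to build, that runners never contend for resources, and that nothing is cached. The function name `drain_minutes` and the runner counts are illustrative assumptions, not figures from the quote.

```python
import math


def drain_minutes(prs: int, minutes_per_pr: int, runners: int) -> int:
    """Wall-clock minutes until the last PR finishes its CI run.

    Simplifying assumptions: identical job durations, no caching,
    and no contention between runners.
    """
    if runners < 1:
        raise ValueError("at least one runner is required")
    waves = math.ceil(prs / runners)  # batches of PRs that run back to back
    return waves * minutes_per_pr


# The quoted scenario: 50 PRs at 20 minutes each.
for runners in (1, 10, 50):
    print(runners, "runner(s):", drain_minutes(50, 20, runners), "minutes")
```

Under these assumptions, a single runner leaves the last PR waiting 1000 minutes, ten runners cut that to 100 minutes, and only 50 runners bring it back to the original 20.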

The traditional systems and processes that we rely on today to handle human-paced development simply can’t cope with AI-accelerated output. Quality control becomes the new dominant bottleneck in your development lifecycle, limiting your ability to harness AI's potential.

Platform engineering for the AI era

Platform engineering emerged to solve a familiar problem: how to scale development practices without losing control. When DevOps first gave teams autonomy to choose their own processes and tools, it worked brilliantly for individual teams. But as companies scaled this approach, the cracks began to show.

Teams optimised their local environments while the broader organization suffered. IT teams struggled to manage sprawling tool estates and competing development frameworks. Unable to agree on any standard approach, teams would duplicate effort by solving common problems independently. Although company standards were well-documented, they were poorly adhered to. Security and compliance teams were left scrambling to keep up with production changes.

Platform engineering solved this by creating shared foundations that teams could build on. Golden paths provide opinionated but flexible routes to production, making the right thing the easiest thing to do. Self-service capabilities let teams move fast without reinventing the wheel, while automated guardrails ensure consistency and compliance without slowing anyone down. Instead of fighting over standards, teams can focus on building features while the platform handles the underlying complexity.

The challenge we face with AI is remarkably similar. AI-generated code creates the same risks: inconsistent patterns, duplicated solutions, and governance headaches. But the platform engineering principles that helped human developers work together efficiently at scale apply just as well to managing AI-generated code.

Platforms built to handle AI agents create better developer experiences for humans too. When you build systems that can manage the volume and complexity of AI-generated code, you're also building systems that make human developers more productive and reduce friction across the board.

Core capabilities your platform needs

1. Industrialised CI/CD

Your CI/CD infrastructure needs to be built for scale and reliability. This means pipelines that can handle 10x more commits without breaking, intelligent queuing that prevents resource contention, and parallel processing that keeps builds moving fast. Think about auto-scaling build runners, optimised caching strategies that work across teams, and circuit breakers that prevent one team's broken build from affecting everyone else.
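One way to picture the circuit-breaker idea is a small tracker that counts consecutive failed builds per team and moves a repeatedly failing team off the shared runners. This is a minimal sketch under stated assumptions: the class `BuildCircuitBreaker`, the threshold of three failures, and the pool names `shared` and `quarantine` are all hypothetical, and a real platform would more likely rely on its CI system's own queuing and isolation features.

```python
from collections import defaultdict


class BuildCircuitBreaker:
    """Tracks consecutive failed builds per team (hypothetical policy)."""

    def __init__(self, failure_threshold: int = 3) -> None:
        self.failure_threshold = failure_threshold
        self._consecutive_failures = defaultdict(int)

    def record(self, team: str, passed: bool) -> None:
        # A passing build closes the breaker again; a failure counts toward opening it.
        if passed:
            self._consecutive_failures[team] = 0
        else:
            self._consecutive_failures[team] += 1

    def is_open(self, team: str) -> bool:
        return self._consecutive_failures[team] >= self.failure_threshold

    def runner_pool(self, team: str) -> str:
        # An open breaker keeps a team's broken builds away from shared capacity.
        return "quarantine" if self.is_open(team) else "shared"


breaker = BuildCircuitBreaker(failure_threshold=3)
for _ in range(3):
    breaker.record("payments", passed=False)
print(breaker.runner_pool("payments"), breaker.runner_pool("search"))
```

The point of the design is isolation: the failing team still gets builds, but its retries no longer compete with everyone else's work.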

2. Developer experience & self-service

Make the right thing the easiest thing to do. Both AI and human developers thrive on having good examples to adapt to their purposes. Provide project templates that include all your security, monitoring, and deployment requirements by default. Golden paths should guide both developers and AI toward your organization's specific patterns. Self-service capabilities let teams provision resources, deploy applications, and manage configurations without tickets or waiting for other teams. Integration with existing corporate tools ensures that all relevant teams have visibility and can contribute to changes.

3. Mature golden paths

Your golden path needs to be optimised for both speed and quality, minimizing human-in-the-loop approval steps while maintaining necessary controls. This means automated style checking, static analysis for code quality, test coverage, dependency checks, vulnerability scanning and compliance checks that all happen automatically on every commit. Developers receive immediate feedback, long before a human is asked to manually review any changes.

The goal is catching issues early when they're cheap to fix, not after a senior developer has invested review time. When the change is ready for human approval, the reviewer can see what has already been checked, what passed, and what specifically needs their expertise.
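The hand-off to a reviewer described above can be sketched as a gate that runs every check and returns a summary of what passed and what failed. The check functions, the 80% coverage threshold, and the shape of the `change` dictionary are assumptions made for illustration; in practice these results would come from your existing linters, scanners, and test tooling.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


Check = Callable[[Dict], CheckResult]


def coverage_check(change: Dict) -> CheckResult:
    # Hypothetical threshold: 80% line coverage.
    coverage = change["coverage"]
    return CheckResult("test coverage", coverage >= 0.80, f"{coverage:.0%} covered")


def vulnerability_check(change: Dict) -> CheckResult:
    count = len(change["vulnerabilities"])
    return CheckResult("vulnerability scan", count == 0, f"{count} finding(s)")


def run_gate(change: Dict, checks: List[Check]) -> Dict:
    """Run every check and summarise the outcome for the author and reviewer."""
    results = [check(change) for check in checks]
    return {
        "ready_for_human_review": all(r.passed for r in results),
        "passed": [r.name for r in results if r.passed],
        "failed": [f"{r.name}: {r.detail}" for r in results if not r.passed],
    }


report = run_gate(
    {"coverage": 0.72, "vulnerabilities": []},
    [coverage_check, vulnerability_check],
)
print(report)
```

Here the change is held back with a specific reason (coverage below the assumed threshold) before any reviewer is asked to look at it.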

4. Intelligent review processes

AI tooling can also help improve and accelerate the code review process. The same models that help produce code can provide an initial code review to catch any obvious issues. But the real value comes from AI's ability to provide contextual guidance: explaining why a particular pattern is problematic, suggesting more performant alternatives, or identifying security vulnerabilities with specific remediation steps that developers can understand and act on.

AI can also be used to analyse the scope and complexity of changes and adapt the level of human oversight accordingly. A simple bug fix in well-tested code might flow straight through automated checks, while changes to authentication logic automatically trigger a thorough human review. In large codebases, intelligent routing sends architectural changes to senior developers while directing routine updates to appropriate reviewers based on expertise and current workload.
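The routing decision can be illustrated with a deliberately simple stand-in. The sketch below uses fixed rules rather than a model, so it shows only the shape of the decision, not how an AI would make it. The path prefixes, the 20-line and 80% coverage thresholds, and the level names are all hypothetical.

```python
from typing import List

# Hypothetical path prefixes treated as sensitive areas of the codebase.
SENSITIVE_PREFIXES = ("auth/", "payments/", "infra/")


def review_level(changed_paths: List[str], lines_changed: int, coverage: float) -> str:
    """Pick a level of human oversight for a change using fixed rules."""
    # Any change touching a sensitive area always gets a thorough human review.
    if any(path.startswith(SENSITIVE_PREFIXES) for path in changed_paths):
        return "senior-review"
    # Small changes in well-tested code can rely on automated checks alone.
    if lines_changed <= 20 and coverage >= 0.80:
        return "automated-only"
    return "standard-review"


print(review_level(["docs/readme.md"], lines_changed=5, coverage=0.92))
print(review_level(["auth/session.py"], lines_changed=5, coverage=0.92))
```

Note that the sensitive-path rule is checked first, so a small, well-tested change to authentication logic is still escalated.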

The goal isn't to replace human judgment, but to augment it. When a senior developer is asked to review code, they're looking at something that's already been analysed, explained, and contextualised. They can focus on architecture, business logic, and strategic decisions rather than hunting for syntax errors or basic logic flaws.

The platform engineering advantage

Organizations already investing in platform engineering are better positioned for AI adoption. The same self-service capabilities, quick-start templates, golden paths, and automated quality gates that help human teams scale work just as well for managing AI-generated code at scale. Likewise, any additional investments you make to manage AI collaboration can help create better experiences for human developers too.

Those without platform engineering will face significant challenges as AI accelerates development velocity while amplifying quality bottlenecks. The question isn't whether AI will transform software development, because it already has. The question is whether your organization has the foundations to harness that transformation without drowning in its output.

The future belongs to teams that build systems that amplify human potential rather than replace it.