Kubernetes 1.35: 10 new Alpha features

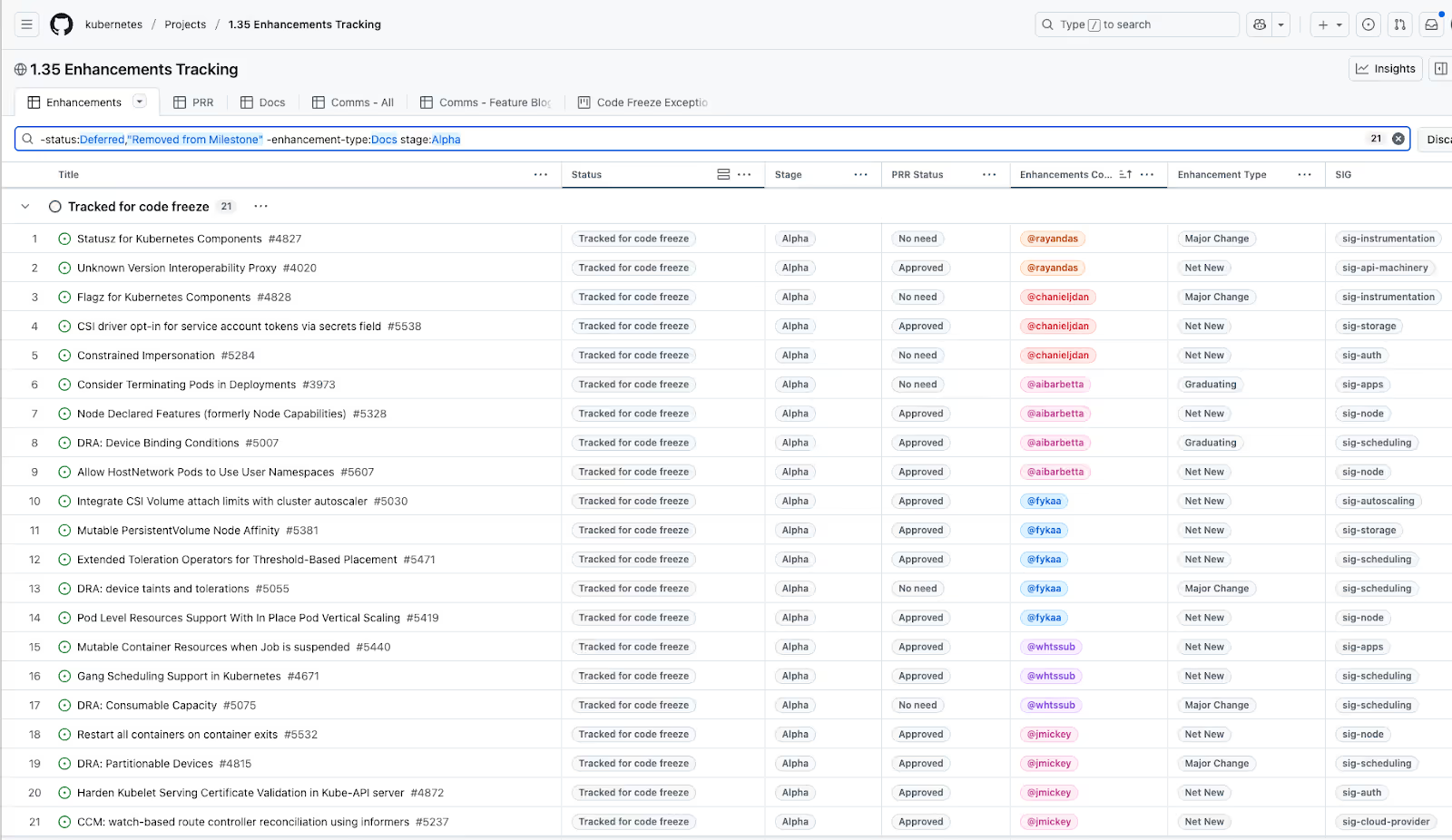

At Cloudsmith, we recently explored the full Kubernetes 1.35 release notes in depth. This post takes a narrower view: the brand-new Alpha features introduced in this version. Alpha features are early-stage, disabled by default, and not recommended for production environments, but they give a strong sense of where Kubernetes is heading. We’ve narrowed the list down to the 10 most interesting new features in 1.35.

DRA advancements in v1.35

Kubernetes continues to make significant improvements towards being the platform of choice for orchestrating AI-native workloads. Two additional Alpha features were added for Dynamic Resource Allocation (DRA) in v1.35.

Firstly, Device Binding Conditions (Enhancement #5007) introduces BindingConditions, a mechanism that defers Pod binding until external resources (like fabric-attached GPUs or FPGAs) are confirmed ready. Unlike current scheduling, which assumes immediate readiness, this feature prevents premature binding and improves reliability for asynchronous or failure-prone resources.
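
To make the deferral concrete, here is a minimal Python sketch of the decision a scheduler could take just before binding. The function name, the condition names, the separate list of failure conditions, and the three-way result are all illustrative assumptions of this sketch, not the actual kube-scheduler or DRA API.

```python
from enum import Enum


class BindDecision(Enum):
    BIND = "bind"
    WAIT = "wait"
    FAIL = "fail"


def decide_binding(required, failure, observed):
    """Toy model: decide whether a Pod may be bound yet.

    required -- condition names that must all be True before binding
    failure  -- condition names that abort binding if any is True
    observed -- dict of condition name -> bool reported for the device
    """
    if any(observed.get(name, False) for name in failure):
        return BindDecision.FAIL
    if all(observed.get(name, False) for name in required):
        return BindDecision.BIND
    return BindDecision.WAIT  # keep the Pod unbound until the device is ready


# Hypothetical condition names for a fabric-attached GPU.
required = ["FabricAttached"]
failure = ["AttachFailed"]

print(decide_binding(required, failure, {}))
print(decide_binding(required, failure, {"FabricAttached": True}))
print(decide_binding(required, failure, {"AttachFailed": True}))
```

The point of the sketch is the middle state: a device that is neither ready nor failed keeps the Pod waiting instead of being bound on the assumption that it is ready.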

Secondly, Partitionable Devices (Enhancement #4815) aims to restore the ability to dynamically partition devices (like multi-host and logical devices) within the modern DRA "structured parameters" framework. Vendors can advertise overlapping partitions that are created on demand after allocation, which increases resource utilisation. The process stays transparent to the end user, who selects a partition via a ResourceClaim.
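
One way to picture overlapping partitions is as entries that draw on a shared pool of capacity. The Python sketch below is a toy model under that assumption; the `allocate` helper, the partition names, and the single `memory` counter are hypothetical and do not mirror the real ResourceSlice schema.

```python
def allocate(name, partitions, remaining):
    """Try to allocate one advertised partition against shared counters.

    partitions -- dict of partition name -> dict of counter -> amount consumed
    remaining  -- dict of counter -> amount still free (mutated on success)
    """
    needs = partitions[name]
    if any(remaining.get(counter, 0) < amount for counter, amount in needs.items()):
        return False  # overlaps with capacity that is already allocated
    for counter, amount in needs.items():
        remaining[counter] -= amount
    return True


# A hypothetical GPU with 40 units of memory, advertised three ways.
partitions = {
    "whole-gpu": {"memory": 40},
    "half-a": {"memory": 20},
    "half-b": {"memory": 20},
}
remaining = {"memory": 40}

print(allocate("half-a", partitions, remaining))     # True
print(allocate("whole-gpu", partitions, remaining))  # False
print(allocate("half-b", partitions, remaining))     # True
```

All three partitions can be advertised at once; it is the shared counter that stops the whole GPU from being handed out after one half is taken.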

Unknown Version Interoperability Proxy

Enhancement #4020

This proposal introduces a Mixed Version Proxy (MVP) to address version skew in Kubernetes clusters during upgrades or downgrades, where API servers may temporarily serve different sets of resources.

The MVP ensures client requests for built-in resources are proxied to an API server that can serve them, preventing 404 errors, and provides a peer-aggregated discovery document by merging resource information from all peer API servers.

This merged discovery is crucial for controllers to operate correctly, avoiding issues like blocked namespace deletion or mistaken garbage collection. The MVP achieves this by adding a new handler to the API server stack that consults a cached, peer-reported aggregated discovery document to locate the correct server and proxy requests to it. The aggregated discovery endpoint (/apis) is updated to merge peer information, although this merging is not supported for the core v1 group (/api).
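
The routing idea can be sketched in a few lines of Python. This is a toy model, not the API server's handler: the server names, the `group/version/resource` strings, and both helper functions are hypothetical, and the real implementation works from cached discovery documents rather than plain sets.

```python
def merge_discovery(peers):
    """Merge per-server resource sets into one resource -> servers map.

    peers -- dict of API server id -> set of "group/version/resource" strings
    """
    merged = {}
    for server, resources in peers.items():
        for resource in resources:
            merged.setdefault(resource, set()).add(server)
    return merged


def route(resource, local_server, peers):
    """Return the server that should handle the request, or None (a 404)."""
    if resource in peers.get(local_server, set()):
        return local_server  # served locally, no proxying needed
    candidates = merge_discovery(peers).get(resource, set())
    # sorted() only makes the toy choice deterministic.
    return sorted(candidates)[0] if candidates else None


# Mid-upgrade: only the newer server knows the hypothetical "widgets" resource.
peers = {
    "apiserver-old": {"apps/v1/deployments"},
    "apiserver-new": {"apps/v1/deployments", "example.k8s.io/v1/widgets"},
}

print(route("example.k8s.io/v1/widgets", "apiserver-old", peers))
print(route("apps/v1/deployments", "apiserver-old", peers))
```

A request that lands on the older server is proxied to the newer one instead of failing, while resources both servers know are answered locally.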

CSIDriver opt-in for SA tokens via Secrets field

Enhancement #5538

Kubernetes 1.35 introduces an opt-in mechanism for CSI drivers to receive generated service account tokens in the dedicated secrets field of the NodePublishVolumeRequest, moving them away from the current location in the volume_context. This change is motivated by security vulnerabilities (like CVEs) that occurred because volume_context is not designed for sensitive data and is often logged without sanitisation. By setting the new serviceAccountTokenInSecrets: true field in the CSIDriver spec, developers can utilise the proper channel for sensitive information, thereby improving security and eliminating the need for custom, driver-specific token sanitisation logic. The default behavior remains unchanged (tokens in volume_context) to ensure complete backward compatibility.

    apiVersion: storage.k8s.io/v1
    kind: CSIDriver
    metadata:
      name: example-csi-driver
    spec:
      tokenRequests:
        - audience: "example.com"
          expirationSeconds: 3600
      # New field for opting into secrets delivery
      serviceAccountTokenInSecrets: true # defaults to false
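
On the driver side, a gradual migration could read the token from the secrets field first and fall back to volume_context. The Python sketch below models the request as two plain dictionaries rather than the real gRPC message. It assumes the tokens are stored under the same key in both places and encoded as JSON keyed by audience; the `extract_tokens` helper is hypothetical.

```python
import json

# Key used for service account tokens; assumed here to be identical in
# volume_context and in secrets.
TOKENS_KEY = "csi.storage.k8s.io/serviceAccount.tokens"


def extract_tokens(secrets, volume_context):
    """Return (tokens, source), preferring secrets over volume_context."""
    for source, fields in (("secrets", secrets), ("volume_context", volume_context)):
        raw = fields.get(TOKENS_KEY)
        if raw:
            return json.loads(raw), source
    return {}, None


# Illustrative payload with a placeholder token value.
payload = json.dumps({"example.com": {"token": "<redacted>"}})

tokens, source = extract_tokens({TOKENS_KEY: payload}, {})
print(source, sorted(tokens))
```

A driver written this way keeps working whether or not the cluster operator has opted in, and it never needs to log or sanitise volume_context once the opt-in is enabled.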

Node Declared Features (NDF)

Enhancement #5328

The proposed NDF framework introduces a standard mechanism for Kubernetes nodes to explicitly declare the availability of specific, feature-gated Kubernetes features in a new DeclaredFeatures field in Node.Status. The primary goal is to mitigate issues arising from version skew between the control plane and nodes, which can lead to pods requiring a new feature being incorrectly scheduled onto an unsupported node.

This declaration will be used by the kube-scheduler to proactively ensure pods are only placed on nodes that meet all feature requirements and by admission controllers to validate operations, ultimately streamlining cluster operations by reducing reliance on manual configurations like taints, tolerations, and complex node labeling schemes. The Kubelet will be responsible for discovering and reporting these features, which must be temporary and tied to the lifecycle of new Kubernetes features (Alpha/Beta/GA).
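
The scheduling side of this reduces to a subset check, sketched below in Python. The feature names, node names, and the `feasible_nodes` helper are hypothetical; how a pod's feature requirements are derived is outside this toy model.

```python
def feasible_nodes(required_features, nodes):
    """Keep nodes whose declared features cover everything the Pod needs.

    required_features -- set of feature names the Pod depends on
    nodes             -- dict of node name -> set of declared feature names
    """
    return sorted(
        name for name, declared in nodes.items()
        if required_features <= declared
    )


# During an upgrade, only the newer node declares the hypothetical FeatureB.
nodes = {
    "node-old": {"FeatureA"},
    "node-new": {"FeatureA", "FeatureB"},
}

print(feasible_nodes({"FeatureB"}, nodes))
print(feasible_nodes(set(), nodes))
```

Pods with no feature requirements remain schedulable everywhere, so the filter only affects workloads that depend on something a lagging node cannot provide.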

Integrate CSI Volume Attach Limits with Cluster Autoscaler

Enhancement #5030

Kubernetes 1.35 integrates CSI volume attach limits into the Kubernetes Cluster Autoscaler. This means the autoscaler now takes into account the maximum number of volumes a node can support (a CSI volume limit) when deciding whether to scale up the cluster to schedule new pods. The change introduces a mechanism where the autoscaler simulates a CSINode object to accurately assess these limits, ensuring that new nodes are provisioned only when a pending pod cannot be scheduled due to a volume attach limit constraint on existing nodes. This feature aims to improve the efficiency and accuracy of cluster scaling for storage-intensive workloads.
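
The decision can be reduced to simple arithmetic, as in the Python sketch below. It is a deliberately narrow toy: it looks only at volume counts and ignores CPU, memory, and every other scheduling constraint. The `plan_scale_up` function, its return strings, and the limit of 25 are hypothetical.

```python
def plan_scale_up(pod_volumes, nodes, template_limit):
    """Decide what to do with a pending pod, based on attach limits only.

    pod_volumes    -- number of CSI volumes the pending pod needs
    nodes          -- list of (attached, limit) pairs for existing nodes
    template_limit -- attach limit assumed for a simulated new node
    """
    if any(attached + pod_volumes <= limit for attached, limit in nodes):
        return "use-existing"  # some node still has attach capacity
    if pod_volumes <= template_limit:
        return "scale-up"  # a fresh node would have room for the volumes
    return "unschedulable"  # even an empty node could not attach them


# Two nearly full nodes with a hypothetical limit of 25 volumes each.
print(plan_scale_up(2, [(24, 25), (25, 25)], template_limit=25))
```

The third branch matters as much as the second: without knowing the limit of the node it is about to create, an autoscaler could add nodes that still cannot host the pod.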

Mutable PersistentVolume Node Affinity

Enhancement #5381

This particular Alpha feature seeks to make the PersistentVolume.spec.nodeAffinity field mutable (changeable after creation). Currently, this field is fixed, which can lead to scheduling failures for stateful pods when the volume's accessibility changes, for example, due to data migration or enabling new storage features. By allowing storage providers to update the nodeAffinity field, this KEP aims to ensure the Kubernetes scheduler uses the latest accessibility requirements when placing pods, thus preventing pods from getting stuck in Pending or ContainerCreating states and significantly improving the reliability of stateful workload scheduling.

Extended Toleration Operators for Threshold-Based Placement

Enhancement #5471

Another AI-related proposal plans to extend Kubernetes Tolerations to support numeric comparison operators (like Lt and Gt) when matching Node Taints, enabling SLA-aware scheduling for clusters mixing high-SLA (on-demand) and low-SLA (spot/preemptible) nodes. This approach is superior to NodeAffinity because it offers policy orientation (nodes declare risk, pods opt in) and crucial eviction semantics via NoExecute and tolerationSeconds for graceful pod drain when a node's SLA degrades.

By allowing workloads to opt in with explicit reliability thresholds (for example, only nodes whose SLA is above 95%), this change preserves the safety model of taints/tolerations while benefiting DRA and AI workloads through better cost-reliability optimisation, stage-aware placement, and resilience.
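
A toy matcher in Python shows how the comparison operators could sit alongside the existing ones. It assumes that Gt matches when the taint's numeric value is greater than the toleration's value (and Lt the reverse), and that non-numeric values never match a comparison. The taint key `example.com/sla` and the encoding of 95.0% as `950` are hypothetical.

```python
def tolerates(toleration, taint):
    """Toy matcher for one toleration against one taint (both dicts).

    Covers Exists, Equal, and the numeric Gt/Lt operators; effect
    matching is left out to keep the sketch short.
    """
    if toleration["key"] != taint["key"]:
        return False
    op = toleration.get("operator", "Equal")
    if op == "Exists":
        return True
    if op == "Equal":
        return toleration.get("value", "") == taint.get("value", "")
    if op in ("Gt", "Lt"):
        try:
            taint_value = int(taint["value"])
            threshold = int(toleration["value"])
        except (KeyError, ValueError):
            return False  # non-numeric values never match a comparison
        return taint_value > threshold if op == "Gt" else taint_value < threshold
    return False


# A node advertising an SLA of 95.0%, encoded as the integer 950.
taint = {"key": "example.com/sla", "value": "950", "effect": "NoExecute"}

print(tolerates({"key": "example.com/sla", "operator": "Gt", "value": "900"}, taint))
print(tolerates({"key": "example.com/sla", "operator": "Gt", "value": "990"}, taint))
```

The first pod accepts any node above 90.0% and matches; the second demands more than 99.0% and is kept off this node.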

Pod Level Resources Support With In-Place Pod Vertical Scaling

Enhancement #5419

This net-new Alpha feature seeks to extend In-Place Pod Resizing (IPPR) to support dynamic adjustments of Pod-level CPU and memory resources, building upon the foundation of Pod Level Resource Specifications (KEP #2837). The motivation is to eliminate the need for pod recreation when scaling the overall resource footprint of multi-container pods, thereby improving resource utilisation and reducing operational overhead. The feature allows operators to change aggregate pod-level resource requests and limits without service disruption. It is currently constrained to cgroup v2 environments and, in its initial alpha release, is gated by the InPlacePodLevelResourcesVerticalScaling feature gate.

Gang Scheduling Support in Kubernetes

Enhancement #4671

The Kubernetes maintainers proposed modifying kube-scheduler to natively support gang scheduling by introducing a new core type called Workload. The Workload object allows the scheduler to recognize groups of pods that must be scheduled together for parallel applications to start and make progress, preventing resource idleness or application failure due to communication timeouts.

Gang scheduling is implemented by making the scheduler wait until all pods in a group reach the same scheduling stage; if a timeout occurs before they all reach that stage, all pods in the group release their resources. This feature aims to standardize gang scheduling support across all Kubernetes distributions, and the Workload object is designed to be extensible for future improvements like topology-aware scheduling, particularly benefiting AI training and inference use cases using various workload types.
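
The all-or-nothing property is the core of the idea, and a small Python sketch can illustrate it. This toy uses a simple first-fit placement on CPU only and does not model waiting or timeouts; the `schedule_gang` function and the node names are hypothetical and unrelated to the real Workload API.

```python
def schedule_gang(pod_cpu_requests, node_free_cpu):
    """All-or-nothing placement: return pod index -> node, or None.

    pod_cpu_requests -- CPU request for each pod in the gang
    node_free_cpu    -- dict of node name -> free CPU
    """
    free = dict(node_free_cpu)  # work on a copy so failure leaves no trace
    placement = {}
    for index, cpu in enumerate(pod_cpu_requests):
        node = next((n for n in sorted(free) if free[n] >= cpu), None)
        if node is None:
            return None  # one pod does not fit, so nobody is placed
        free[node] -= cpu
        placement[index] = node
    return placement


nodes = {"node-a": 2, "node-b": 2}

print(schedule_gang([2, 2], nodes))     # {0: 'node-a', 1: 'node-b'}
print(schedule_gang([2, 2, 2], nodes))  # None
```

In the second call two of the three pods would fit, but placing them would only hold resources idle while the job cannot start, which is exactly the outcome gang scheduling is meant to prevent.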

Restart all containers on container exits

Enhancement #5532

Last but not least, this new "Restart All Containers" action is an extension to existing container restart rules. The goal is to allow a container's exit to trigger an in-place restart of the entire pod, including re-running init containers, while efficiently preserving the pod's sandbox, IP address, and network namespace. This method is faster and more resource-efficient than deleting and recreating the pod, offering significant benefits for workloads like AI/ML training that require a clean environment reset, often triggered by a watcher sidecar, to ensure predictable state and re-execute necessary setup tasks.
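
The difference from deleting and recreating the pod is easiest to see in what survives the restart. The Python sketch below is a toy state model only: the `ToyPod` class, its fields, and the trigger exit code 42 are hypothetical and do not reflect the kubelet's implementation or the restart rule syntax.

```python
from dataclasses import dataclass, field


@dataclass
class ToyPod:
    ip: str
    init_runs: int = 0
    restarts: dict = field(default_factory=dict)  # container name -> count

    def start(self, containers):
        """First start: init containers run once, then regular containers."""
        self.init_runs += 1
        for name in containers:
            self.restarts.setdefault(name, 0)

    def restart_all(self):
        """In-place restart: same IP and sandbox, init containers run again."""
        self.init_runs += 1
        for name in self.restarts:
            self.restarts[name] += 1


def on_container_exit(pod, exit_code, trigger_codes):
    """Restart every container only for the configured exit codes."""
    if exit_code in trigger_codes:
        pod.restart_all()
        return True
    return False


pod = ToyPod(ip="10.0.0.7")
pod.start(["trainer", "watcher"])

# The watcher sidecar exits with the hypothetical trigger code 42.
print(on_container_exit(pod, 42, {42}), pod.ip, pod.init_runs, pod.restarts)
```

After the trigger, every container has restarted and the init step has run a second time, yet the pod keeps its IP address, which a delete-and-recreate cycle would not guarantee.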

Wrapping up

Kubernetes 1.35 introduces a strong wave of innovation at the Alpha level. There is significant progress across AI/ML workload orchestration (DRA advancements, Extended Toleration Operators, Gang Scheduling, and new container restart actions), stateful workload reliability (Mutable PersistentVolume Node Affinity and CSI Volume Attach Limits integration with Cluster Autoscaler), cluster operability/resilience (MVP and NDF), resource efficiency (Pod Level Vertical Scaling), and security (CSIDriver opt-in for SA tokens via Secrets).

While there’s a good chance you won’t be testing these experimental features when Kubernetes 1.35 is finally released in late December 2025, these Alpha features are still worth tracking as they mature. And if you have questions about the upcoming release, other than just the Alpha updates, there is a thread open on Reddit for Kubernetes 1.35.