Software is evolving from executing predefined instructions to operating with genuine autonomy. This shift is led by agentic AI, a class of artificial intelligence focused on autonomous systems that can perceive their environment, reason through problems, and execute multi-step tasks with minimal human intervention. Unlike traditional AI, agentic systems are proactive and goal-driven. They use Large Language Models (LLMs) not just to generate content, but as a reasoning "brain" that orchestrates actions and interacts with the world through external tools and APIs. These LLMs can be accessed as remote APIs or run locally alongside the agent.

The core of this autonomy is the agentic loop: Perception, Reasoning, Planning, Action, and Learning. This cycle produces a new type of workload that is dynamic, long-running, and non-deterministic. This is fundamentally different from the stateless microservices of traditional cloud-native architecture. Industry leaders now see agentic AI as a new computing paradigm, not just another workload to be containerized.
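To make the loop concrete, the minimal Python sketch below runs a bounded perceive, reason/plan, act, learn cycle. The `reason` function is a hypothetical stand-in for an LLM call and the single `add` tool replaces real external APIs; production agent frameworks structure this loop differently. The `max_steps` cap is a simple guard against a loop that never reaches its goal.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    goal: str
    memory: list = field(default_factory=list)  # outcomes of past actions ("Learning")
    done: bool = False


def run_agent(state, perceive, reason, tools, max_steps=5):
    """Run perceive -> reason/plan -> act -> learn until the planner says "finish"."""
    for _ in range(max_steps):
        observation = perceive(state)                  # Perception
        tool_name, args = reason(state, observation)   # Reasoning + Planning (an LLM call in practice)
        if tool_name == "finish":
            state.done = True
            break
        result = tools[tool_name](**args)              # Action through an external tool
        state.memory.append(f"{tool_name}({args}) -> {result}")  # Learning: remember the outcome
    return state


def fake_reason(state, observation):
    # Hypothetical stand-in for an LLM: call the "add" tool twice, then finish.
    if len(state.memory) < 2:
        return "add", {"a": len(state.memory), "b": 10}
    return "finish", {}


final = run_agent(
    AgentState(goal="demo"),
    perceive=lambda s: s.memory[-1:],  # observe the most recent outcome
    reason=fake_reason,
    tools={"add": lambda a, b: a + b},
)
print(final.done, final.memory)
```

Because every iteration is a fresh decision, the number of steps (and therefore compute, tokens, and run time) is not known in advance, which is exactly what makes these workloads dynamic and non-deterministic.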

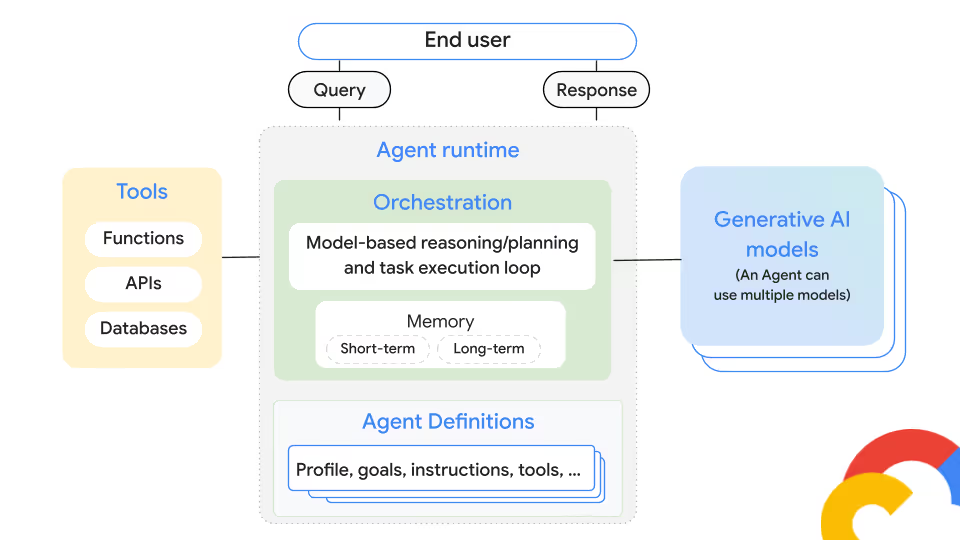

The dynamism and autonomy of agentic AI render static infrastructure models obsolete. A new foundation is needed, one that is elastic, resilient, and secure. This article provides a technical overview of how Kubernetes, applied through platform engineering principles, offers the essential building blocks for this foundation, creating a robust operating system for the next generation of intelligent software. The diagram above illustrates how agents work.

The unique demands of agentic workloads

Successfully deploying agentic AI is less about the individual agent and more about orchestrating the entire end-to-end workflow in which it operates. This workflow-centric model gives rise to several technical challenges that platform engineers must address.

- Dynamic and ephemeral compute: Agentic workflows create unpredictable, "bursty" patterns of activity. A main agent might suddenly spawn dozens of short-lived sub-agents to handle parallel tasks. This requires a highly elastic platform that can rapidly scale resources up and down to meet sudden demand without incurring high costs or performance issues.

- Persistent state and long-term memory: Unlike typical stateless applications, agents need "memory" to maintain context and learn from past interactions. The platform must provide a durable way to manage this state, ensuring an agent's memory survives restarts, failures, or scaling events.

- Complex and long-running workflow orchestration: An agent's process is not a simple script but a complex, multi-step workflow that can run for hours or days. The platform needs robust orchestration capabilities to manage these long-running, interdependent tasks, handle failures, and ensure reliable execution from start to finish.

- Scalable data access and tool integration: Agents rely on real-time access to external tools like APIs and databases to function. The infrastructure must provide secure, low-latency, and scalable connections to these various data sources and systems, enabling the agent to observe and act upon the world.

- Security and governance for autonomous actions: Because agents can act independently, for example by modifying a database, security is critical. The platform must enforce strict guardrails, including fine-grained access controls and adaptive security policies, to prevent unexpected or harmful actions.

These challenges require platform engineers to balance the trade-offs among performance, cost, security, and latency. An effective platform must not only meet these five technical demands but also provide mechanisms to manage these competing concerns intelligently.

Kubernetes as the foundational layer for Agentic AI

A new, dynamic, and resilient infrastructure is needed for agentic workloads, and Kubernetes provides the ideal foundation. It acts as a robust operating system for managing the entire lifecycle of agentic applications.

Kubernetes' core architectural principles directly address the unique challenges of agentic AI:

- Declarative control plane: You declare the desired state for your agents (e.g., resources and policies), and Kubernetes automatically works to make it a reality. This mirrors the goal-oriented nature of agents themselves, removing the need to script every possible action.

- Scalability and elasticity: Kubernetes can automatically scale resources up or down to handle the bursty and unpredictable compute demands of agentic workflows. This ensures efficient resource use, whether for a single task or a large-scale, multi-agent system.

- Extensibility: Kubernetes is not rigid; it can be extended with Custom Resource Definitions (CRDs) and operators. This allows you to create an "agent-native" platform where you can manage agents declaratively, effectively teaching Kubernetes to understand the specific needs of agentic AI.

- Resilience and self-healing: Kubernetes is designed to be self-healing. It automatically restarts failed containers and reschedules workloads, providing the resilient foundation necessary for long-running, mission-critical agentic tasks to execute without disruption.

- Portability and isolation: Containers serve as a natural building block, packaging an agent's code and dependencies into a portable, isolated unit. This ensures consistency across environments and allows multiple agents to share the same infrastructure while remaining isolated from one another.
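Kubernetes controllers implement the declarative model through reconciliation: they repeatedly compare the desired state you declared with the state they observe and act on the difference. The toy Python model below is not Kubernetes' actual controller code; it only illustrates the idea for replica counts, and the agent names are hypothetical.

```python
def reconcile(desired, observed):
    """Return the actions needed to move observed replica counts toward desired ones."""
    actions = []
    for name in sorted(desired.keys() | observed.keys()):
        want, have = desired.get(name, 0), observed.get(name, 0)
        if want > have:
            actions.append(("scale_up", name, want - have))
        elif want < have:
            actions.append(("scale_down", name, have - want))
    return actions


def apply_actions(actions, observed):
    """Simulate the cluster carrying out the actions."""
    state = dict(observed)
    for op, name, count in actions:
        state[name] = state.get(name, 0) + (count if op == "scale_up" else -count)
        if state[name] == 0:
            del state[name]
    return state


desired = {"planner": 1, "worker": 5}                   # what you declare
observed = {"planner": 1, "worker": 2, "old-agent": 1}  # what is currently running

actions = reconcile(desired, observed)
observed = apply_actions(actions, observed)
print(actions)
print(reconcile(desired, observed))  # nothing left to do once the states match
```

Because the loop compares whole states rather than replaying a script of commands, it converges no matter how the cluster drifted, and running it again once the states match is a no-op.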

Kubernetes primitives deep dive

While Kubernetes provides the right primitives, its practical application for agentic AI requires a nuanced understanding of its various workload controllers and resources. Choosing the correct primitive for a given agent type is critical to building a robust and efficient system. This section provides a detailed technical guide to mapping agentic workload patterns to the appropriate Kubernetes objects.

Agent State: Deployments vs. StatefulSets

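The most important decision is whether an agent needs to remember things. Kubernetes Deployments suit stateless components, such as simple tools an agent might call (e.g., a currency converter), that don't need persistent storage; their pods are treated as identical and disposable. StatefulSets, on the other hand, are designed for workloads that need careful storage management: each pod gets a stable identity and its own persistent volume, which is reattached when the pod is restarted or rescheduled. Databases and pub/sub systems typically run better inside a StatefulSet, as can agents that keep their memory on local disk.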
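As an illustration, the following Python function builds a StatefulSet manifest for a hypothetical agent that keeps its memory under `/data`. It emits JSON, which `kubectl apply -f` accepts just like YAML. The agent name, image, mount path, and storage size are placeholders, and the headless Service referenced by `serviceName` must be created separately.

```python
import json


def agent_statefulset(name, image, replicas=1, storage="10Gi"):
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,  # headless Service that gives each pod a stable DNS name
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "volumeMounts": [{"name": "memory", "mountPath": "/data"}],
                    }]
                },
            },
            # One PVC per replica (memory-<name>-0, memory-<name>-1, ...),
            # reattached to the pod with the same ordinal after restarts.
            "volumeClaimTemplates": [{
                "metadata": {"name": "memory"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": storage}},
                },
            }],
        },
    }


print(json.dumps(agent_statefulset("research-agent", "example.com/research-agent:0.1"), indent=2))
```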

Agent tasks: Jobs vs. workflows

How an agent executes its tasks determines the best primitive.

- For discrete tasks: Use a Job for a task that runs once to completion (e.g., generating a report). Use a CronJob for tasks that run on a schedule.

- For complex reasoning: An agent's multi-step thought process is best modeled as a workflow. Instead of scripting multiple Jobs, use a container-native workflow engine like Argo Workflows to manage dependencies, retries, and the flow of logic.
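Workflow engines handle dependency ordering and retries for you. To show what that involves, here is a small Python sketch that runs hypothetical agent steps in dependency order using the standard library's `graphlib.TopologicalSorter` and retries a transiently failing step. It is a conceptual model only; in Argo Workflows the same structure is declared in YAML as a `dag` template whose tasks list their `dependencies`, with retries configured through `retryStrategy`.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def run_workflow(steps, deps, max_retries=2):
    """Run each step after its dependencies, retrying a failing step up to max_retries times."""
    results = {}
    for name in TopologicalSorter(deps).static_order():
        inputs = {dep: results[dep] for dep in deps.get(name, ())}
        for attempt in range(max_retries + 1):
            try:
                results[name] = steps[name](inputs)
                break
            except Exception:
                if attempt == max_retries:
                    raise  # retries exhausted: fail the whole workflow
    return results


calls = {"search": 0}


def flaky_search(inputs):
    # Simulates a tool call that times out on its first attempt.
    calls["search"] += 1
    if calls["search"] == 1:
        raise TimeoutError("transient tool failure")
    return ["doc-a", "doc-b"]


steps = {
    "plan": lambda inputs: "find recent incidents",
    "search": flaky_search,
    "fetch_docs": lambda inputs: ["runbook"],
    "summarize": lambda inputs: sorted(inputs["search"] + inputs["fetch_docs"]),
}
# Each step maps to the set of steps it depends on.
deps = {"search": {"plan"}, "fetch_docs": {"plan"}, "summarize": {"search", "fetch_docs"}}

out = run_workflow(steps, deps)
print(out["summarize"], "search attempts:", calls["search"])
```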

Implementing agent memory with PersistentVolumes

Pods in Kubernetes get stable storage through PersistentVolumeClaims (PVCs). A PVC is a request for storage that binds to a PersistentVolume (PV), which represents a piece of physical storage (e.g., a cloud disk or an NFS share). Because a PV's lifecycle is independent of any individual pod, an agent's memory stored on it survives pod restarts and rescheduling.
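A minimal sketch, with hypothetical names and sizes: the first function builds a PVC manifest, and the second returns the `volumes` entry and the `volumeMounts` entry an agent's pod spec needs to mount it. On clusters with a default StorageClass, a matching PV is provisioned dynamically for the claim, so PVs rarely need to be created by hand.

```python
import json


def memory_pvc(name, size="5Gi", storage_class=None):
    """PersistentVolumeClaim for an agent's memory."""
    spec = {
        "accessModes": ["ReadWriteOnce"],  # mountable read-write by a single node
        "resources": {"requests": {"storage": size}},
    }
    if storage_class:
        spec["storageClassName"] = storage_class  # if omitted, the default StorageClass (if any) is used
    return {"apiVersion": "v1", "kind": "PersistentVolumeClaim",
            "metadata": {"name": name}, "spec": spec}


def pod_memory_mount(claim_name, mount_path="/agent/memory"):
    """The two fragments a pod spec needs: a volume backed by the claim, and a container mount."""
    volume = {"name": "agent-memory", "persistentVolumeClaim": {"claimName": claim_name}}
    mount = {"name": "agent-memory", "mountPath": mount_path}
    return volume, mount


volume, mount = pod_memory_mount("planner-memory")
print(json.dumps(memory_pvc("planner-memory"), indent=2))
print(json.dumps({"volumes": [volume], "volumeMounts": [mount]}, indent=2))
```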

Orchestrating multi-agent systems

Many advanced agentic applications are not single agents but systems of multiple collaborating agents. A common pattern is a "planner" or "supervisor" agent that decomposes a problem and delegates sub-tasks to specialized "worker" agents. Kubernetes provides the building blocks to implement these patterns effectively. A multi-agent system can be modeled either as a single pod that hosts every agent or as a separate set of pods for each agent. A single pod hosting all agents is a single point of failure and forces every agent to scale together. Instead, run each agent as its own Deployment with its own set of pods, so that each agent can be scaled, updated, and secured independently.
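A minimal sketch of the one-Deployment-per-agent layout, assuming three hypothetical agents: each gets its own Deployment, so it can be scaled and rolled out independently, and a ClusterIP Service that other agents can reach by DNS name. The images, replica counts, and port are placeholders, and each Deployment assumes a ServiceAccount with the same name already exists.

```python
import json

AGENTS = {  # hypothetical agents: image and replica count for each
    "planner": {"image": "example.com/agents/planner:1.0", "replicas": 1},
    "researcher": {"image": "example.com/agents/researcher:1.0", "replicas": 3},
    "writer": {"image": "example.com/agents/writer:1.0", "replicas": 2},
}


def deployment(name, image, replicas):
    labels = {"app": name, "role": "agent"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "serviceAccountName": name,  # one identity per agent
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


def service(name, port=8080):
    # Default Service type is ClusterIP: reachable inside the cluster as <name>.<namespace>
    return {"apiVersion": "v1", "kind": "Service", "metadata": {"name": name},
            "spec": {"selector": {"app": name}, "ports": [{"port": port, "targetPort": port}]}}


manifests = []
for name, cfg in AGENTS.items():
    manifests += [deployment(name, cfg["image"], cfg["replicas"]), service(name)]

print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```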

Inter-agent communication with a Service Mesh: While agents can communicate through standard Kubernetes Services, this approach lacks the security, reliability, and observability required for production systems. A Service Mesh, such as Istio or Linkerd, provides a dedicated infrastructure layer to manage this communication transparently. This enables:

- Secure communication with automatic mutual TLS (mTLS) encryption for all agent-to-agent communication, ensuring data is secure in transit without any changes to the agent code.

- Authentication and authorization enforced at the network layer, outside the agent code, through declarative policies managed as Kubernetes resources.

- Reliable communication with automated retries, timeouts, and circuit breaking, making the communication fabric resilient to transient network failures.

- Observable communication with detailed metrics, logs, and distributed traces for all inter-agent traffic, providing deep visibility into how agents interact, helping identify performance bottlenecks, and simplifying the debugging of complex issues. This is especially important given the non-deterministic nature of agents: rich observability data lets you see what each agent is actually doing.
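With a mesh, retries, timeouts, and circuit breaking live in the sidecar proxy rather than in agent code (Istio, for example, configures circuit breaking through a DestinationRule). To make the circuit-breaking behavior concrete, here is a minimal Python model of what the proxy does on the agent's behalf. It illustrates the pattern only and is not how Istio or Linkerd implement it; the thresholds are arbitrary.

```python
import time


class CircuitBreaker:
    """Opens after `threshold` consecutive failures, fails fast while open,
    and lets a single trial call through once `reset_after` seconds have passed."""

    def __init__(self, threshold=3, reset_after=30.0, clock=time.monotonic):
        self.threshold = threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: failing fast")
            self.opened_at = None  # half-open: allow one trial request
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # (re)open the circuit
            raise
        self.failures = 0  # a success closes the circuit fully
        return result
```

Failing fast while a downstream agent is unhealthy keeps callers from piling up requests that would only time out, which matters when one planner fans out to many workers.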

The platform engineering blueprint for enterprise-grade agentic AI

Deploying agentic AI at scale requires a platform-centric approach. Instead of having every AI engineer become a Kubernetes expert, organizations should consider building an Internal Developer Platform (IDP) that abstracts away infrastructure complexity, and defining Golden Paths that help internal teams deploy agentic AI effectively.

- Internal Developer Platform (IDP): This is a self-service platform that provides AI and ML teams with curated tools, services, and automated workflows. It allows them to deploy and manage agents efficiently without needing deep knowledge of the underlying infrastructure, treating the platform as an internal product.

- "Golden Paths": These are opinionated, predefined workflows for common tasks, like deploying a new agent. A Golden Path is the "easy button" that encodes security, compliance, and reliability best practices directly into the development process. A Golden Path for deploying a new agent might look like this:

  - Scaffold: A developer uses a template to create a new agent project with a standardized structure. Open-source frameworks such as the Agent Development Kit (ADK) are a good option to build the template around.

  - Configure: The developer provides key details such as the LLM endpoint and database credentials.

  - Deploy: A preconfigured CI/CD pipeline automatically builds, tests, and deploys the agent.

  - Integrate: The platform automatically handles logging, monitoring, and security, making the agent production-ready by default.
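The Configure step is a natural place to encode guardrails. As a hypothetical example, the template could reject an agent configuration that is missing required fields, points at a non-HTTPS LLM endpoint, or inlines a database password instead of referencing a secret. The field names below are assumptions, not part of ADK or any specific platform.

```python
from urllib.parse import urlparse

# Hypothetical fields a Golden Path template might require.
REQUIRED = ("agent_name", "llm_endpoint", "db_credentials_secret")


def validate_agent_config(cfg):
    """Return a list of problems; an empty list means the config passes the checks."""
    problems = [f"missing required field: {key}" for key in REQUIRED if not cfg.get(key)]
    endpoint = cfg.get("llm_endpoint")
    if endpoint:
        parsed = urlparse(endpoint)
        if parsed.scheme != "https" or not parsed.netloc:
            problems.append("llm_endpoint must be an https:// URL")
    # Credentials must be a reference to a secret, never the secret value itself.
    if "db_password" in cfg:
        problems.append("db_password must not be set inline; reference a secret instead")
    return problems


print(validate_agent_config({
    "agent_name": "triage",
    "llm_endpoint": "http://llm",
    "db_password": "not-a-real-password",
}))
```

Running a check like this in the pipeline, rather than in a review checklist, is what makes the Golden Path the easy path: an insecure configuration simply fails to deploy.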

Essential platform capabilities for production readiness

To support agentic AI in a production environment, an IDP must provide a set of non-negotiable, enterprise-grade capabilities that address the unique challenges of robustness, security, observability, and cost.

Robust security framework

The autonomy of agents makes security the primary concern. The platform must enforce a zero-trust model by default.

- Identity and access: Implement the principle of least privilege. Each agent should have a dedicated Kubernetes ServiceAccount, bound through a RoleBinding to a Role that grants only the minimum permissions it needs. Over-privileged agents are a major security risk, because a compromised agent could then take unauthorized actions.

- Network isolation: By default, all pods in Kubernetes can communicate. This must be restricted. Use NetworkPolicies to establish a "default-deny" stance, blocking all traffic unless it's explicitly allowed. This segmentation contains the blast radius if an agent is compromised, preventing lateral movement.

- Secrets management: Never hardcode secrets like API keys. Instead, integrate an external secrets manager like Google Cloud Secret Manager. This allows secrets to be securely and dynamically injected into agent pods at runtime, providing secure storage, automated rotation, and a clear audit trail.
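The first two practices can be expressed as ordinary Kubernetes objects. The sketch below generates, for a hypothetical agent and namespace, a ServiceAccount, a Role that can only `get` one named ConfigMap, the RoleBinding that connects them, and a namespace-wide default-deny NetworkPolicy. Names are placeholders, and the permissions should be tailored to what the agent actually needs.

```python
import json


def least_privilege_bundle(agent, namespace, configmap):
    """ServiceAccount, Role, RoleBinding, and a default-deny NetworkPolicy for one agent."""
    sa = {"apiVersion": "v1", "kind": "ServiceAccount",
          "metadata": {"name": agent, "namespace": namespace}}
    role = {"apiVersion": "rbac.authorization.k8s.io/v1", "kind": "Role",
            "metadata": {"name": f"{agent}-reader", "namespace": namespace},
            # Read access to a single named ConfigMap and nothing else.
            "rules": [{"apiGroups": [""], "resources": ["configmaps"],
                       "resourceNames": [configmap], "verbs": ["get"]}]}
    binding = {"apiVersion": "rbac.authorization.k8s.io/v1", "kind": "RoleBinding",
               "metadata": {"name": f"{agent}-reader", "namespace": namespace},
               "subjects": [{"kind": "ServiceAccount", "name": agent, "namespace": namespace}],
               "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role",
                           "name": f"{agent}-reader"}}
    # An empty podSelector selects every pod in the namespace; listing both policy
    # types with no rules denies all ingress and egress until other policies allow it.
    deny_all = {"apiVersion": "networking.k8s.io/v1", "kind": "NetworkPolicy",
                "metadata": {"name": "default-deny-all", "namespace": namespace},
                "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]}}
    return [sa, role, binding, deny_all]


bundle = least_privilege_bundle("planner", "agents", "planner-config")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": bundle}, indent=2))
```

A default-deny policy that covers egress also blocks DNS lookups and calls to the LLM endpoint, so each agent needs explicit allow policies for the destinations it legitimately uses. NetworkPolicies are only enforced when the cluster's network plugin supports them.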

Observability for non-deterministic systems

Observing agentic AI requires more than just monitoring CPU and memory. The non-deterministic nature of their LLM-driven reasoning means that platform teams and developers must be able to understand why an agent made a particular decision.

- Tracing agentic workflows with OpenTelemetry: Traditional logging is insufficient. The platform should be built around distributed tracing, using open standards like OpenTelemetry. By instrumenting the agent's code and its interactions with tools and LLMs, a complete trace can be captured for each task. This trace should include the initial prompt, each step of the agent's reasoning process (the "thought" process), every tool it decides to call, the specific parameters passed to those tools, the results it receives, and its final output. This level of detail is indispensable for debugging unexpected behavior and auditing agent actions.

- Evaluation and monitoring: Agent observability must extend beyond system health to include continuous evaluation of the agent's performance, quality, and safety. The platform should integrate with evaluation frameworks that can track key agent-specific metrics, such as:

  - Task success rate: The percentage of goals completed successfully.

  - Hallucination rate: The frequency of factually incorrect or unsupported claims.

  - Tool-use accuracy: Whether the correct tools are being called with the correct parameters.

  - Adherence to guardrails: Whether the agent is operating within its predefined constraints.

  The platform should monitor these metrics over time, alerting teams to performance degradation or behavioral drift, which might indicate a need for retraining or prompt refinement.
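How each metric is scored depends on your evaluation framework (human review, reference answers, or an LLM acting as a judge). Assuming per-task grades are already available, a minimal Python sketch that aggregates them and flags drift against a baseline could look like this. The record fields, the definition of guardrail adherence as the share of tasks with zero violations, and the 0.05 tolerance are all illustrative choices.

```python
from dataclasses import dataclass


@dataclass
class EvalRecord:
    """Grades for one completed agent task (produced by your evaluation framework)."""
    task_succeeded: bool
    claims: int                # factual claims in the final answer
    unsupported_claims: int    # claims graded as unsupported or incorrect
    tool_calls: int
    correct_tool_calls: int    # right tool, right parameters
    guardrail_violations: int


def summarize(records):
    n = len(records)
    claims = sum(r.claims for r in records)
    calls = sum(r.tool_calls for r in records)
    return {
        "task_success_rate": sum(r.task_succeeded for r in records) / n,
        "hallucination_rate": sum(r.unsupported_claims for r in records) / claims if claims else 0.0,
        "tool_use_accuracy": sum(r.correct_tool_calls for r in records) / calls if calls else 1.0,
        "guardrail_adherence": sum(r.guardrail_violations == 0 for r in records) / n,
    }


HIGHER_IS_BETTER = {"task_success_rate", "tool_use_accuracy", "guardrail_adherence"}


def drift_alerts(baseline, current, tolerance=0.05):
    """Names of metrics that moved in the wrong direction by more than `tolerance`."""
    alerts = []
    for name, base in baseline.items():
        delta = current[name] - base
        worse = -delta if name in HIGHER_IS_BETTER else delta
        if worse > tolerance:
            alerts.append(name)
    return alerts


records = [
    EvalRecord(True, 4, 0, 3, 3, 0),
    EvalRecord(False, 5, 2, 2, 1, 1),
    EvalRecord(True, 1, 0, 1, 1, 0),
    EvalRecord(True, 0, 0, 2, 2, 0),
]
baseline = {"task_success_rate": 0.9, "hallucination_rate": 0.1,
            "tool_use_accuracy": 0.9, "guardrail_adherence": 1.0}
summary = summarize(records)
alerts = drift_alerts(baseline, summary)
print(summary)
print("alerts:", alerts)
```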

Cost management and optimization (FinOps)

Agentic workloads can be notoriously expensive, driven by frequent, token-intensive LLM calls and potential demand for specialized hardware like GPUs. The cost can also be highly volatile and unpredictable, making proactive financial governance (FinOps) a critical platform capability.
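A rough per-task cost model makes this volatility easier to reason about. The Python sketch below uses hypothetical per-1K-token prices and a three-step task in which each LLM call resends the growing context, then projects a monthly figure and compares it against an assumed budget. With these numbers a task costs $0.06, so 1,000 tasks a day comes to about $1,800 over 30 days.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    # Hypothetical per-1K-token rates in USD; substitute your provider's actual prices.
    input_per_1k: float
    output_per_1k: float


def task_cost(llm_calls, pricing):
    """Cost of one agent task; llm_calls holds (input_tokens, output_tokens) per LLM call."""
    return sum(i / 1000 * pricing.input_per_1k + o / 1000 * pricing.output_per_1k
               for i, o in llm_calls)


def monthly_cost(cost_per_task, tasks_per_day, days=30):
    return cost_per_task * tasks_per_day * days


pricing = Pricing(input_per_1k=0.003, output_per_1k=0.015)
# Input tokens grow at each step because the agent resends its accumulated context.
calls = [(2000, 300), (4000, 500), (6000, 800)]
per_task = task_cost(calls, pricing)
projected = monthly_cost(per_task, tasks_per_day=1000)
budget = 1500.0  # hypothetical monthly budget
print(f"per task: ${per_task:.4f}, projected month: ${projected:,.2f}")
if projected > budget:
    print("projected spend exceeds budget")
```

Because the context is resent on every call, a task that takes twice as many steps costs considerably more than twice as much, which is why step count and context size are the first levers to examine.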

Google Kubernetes Engine: Your companion for a production-ready AI platform

While the principles of platform engineering on Kubernetes provide a universal blueprint, Google Kubernetes Engine (GKE) offers a suite of managed services and AI-specific optimizations that accelerate the journey to production-ready agentic AI. As the birthplace of Kubernetes, Google Cloud provides a deeply integrated and mature environment designed to tackle the unique challenges of AI/ML workloads at scale.

Automated and scalable compute with GKE Autopilot

The dynamic and often unpredictable compute demands of agentic workflows are directly addressed by GKE's two modes of operation: Standard and Autopilot. GKE Autopilot, in particular, offers a fully managed experience that is well suited for agentic applications. It automatically provisions and manages the underlying cluster infrastructure, allowing teams to focus on their agent's logic rather than node configuration. For agentic workloads with variable or bursty traffic, Autopilot's pay-per-pod pricing model can provide significant cost savings by ensuring you only pay for the resources your workloads request. This automated right-sizing and scaling directly meets the need for ephemeral compute without the operational overhead of manual cluster management.
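Autopilot provisions nodes for whatever pods you run, but scaling the number of agent pods is still configured on the workload, typically with a HorizontalPodAutoscaler. The sketch below builds an `autoscaling/v2` HPA for a hypothetical Deployment and defines explicit CPU and memory requests. Because Autopilot bills on requests and CPU-utilization targets are computed relative to them, stating requests explicitly keeps both cost and scaling behavior predictable. The names, replica bounds, and values are placeholders.

```python
import json


def agent_hpa(deployment, min_replicas=1, max_replicas=20, cpu_target_percent=70):
    """HorizontalPodAutoscaler that scales a Deployment on average CPU utilization."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    # Utilization is a percentage of the pods' CPU requests
                    "target": {"type": "Utilization", "averageUtilization": cpu_target_percent},
                },
            }],
        },
    }


# Container resources to place in the Deployment's pod template (placeholder values).
AGENT_RESOURCES = {"requests": {"cpu": "500m", "memory": "1Gi"}}

print(json.dumps(agent_hpa("research-agent"), indent=2))
```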

Accelerated performance with specialized hardware

Agentic AI, especially for inference tasks, often requires specialized hardware. GKE provides extensive support for both GPUs and Google's own Tensor Processing Units (TPUs), which are pivotal for high-throughput inference. GKE simplifies the management of these accelerators with features like:

- Optimized provisioning: GKE's Dynamic Workload Scheduler (DWS) flex-start provisioning mode improves the ability to obtain scarce GPU and TPU resources for short-duration training or inference tasks.

- Efficient utilization: GKE supports GPU sharing. With multi-instance GPUs, for example, a single physical GPU is partitioned into multiple smaller, isolated instances. This allows finer-grained resource allocation, maximizing utilization and cost-effectiveness by running multiple agent models on a single GPU.

- AI-aware orchestration: Tools like Cluster Director and the GKE Inference Gateway are tailored to streamline the deployment and management of AI models across large GPU/TPU clusters, reducing cold-start times and enabling model-aware routing.
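For illustration, the function below builds a pod that requests one NVIDIA GPU on GKE. It selects the accelerator type with the `cloud.google.com/gke-accelerator` node label and can optionally add the node labels GKE uses for GPU time-sharing, a sharing strategy in which containers take turns on one GPU instead of receiving isolated partitions. The accelerator type, image, and client count are assumptions; check the GKE GPU documentation for the values available in your region and cluster mode (Standard clusters also need a GPU node pool configured accordingly).

```python
import json


def gpu_inference_pod(name, image, accelerator="nvidia-l4", gpus=1, shared_clients=None):
    node_selector = {"cloud.google.com/gke-accelerator": accelerator}
    if shared_clients:
        # GPU time-sharing: up to `shared_clients` containers share one physical GPU
        node_selector["cloud.google.com/gke-gpu-sharing-strategy"] = "time-sharing"
        node_selector["cloud.google.com/gke-max-shared-clients-per-gpu"] = str(shared_clients)
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "nodeSelector": node_selector,
            "containers": [{
                "name": name,
                "image": image,
                # GPUs are requested through limits on the nvidia.com/gpu extended resource
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


print(json.dumps(gpu_inference_pod("llm-server", "example.com/llm-server:1.0", shared_clients=2), indent=2))
```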

Enterprise-grade security by default

GKE is built with a secure-by-design posture, providing a robust security framework that is critical for governing autonomous agents. Key features include:

- Workload Identity Federation for GKE: This is the recommended best practice for authenticating to Google Cloud services. It allows you to assign distinct, fine-grained IAM identities to your Kubernetes service accounts, eliminating the need to manage and rotate static service account keys. This ensures that an agent running in a pod has only the permissions it needs to interact with other Google Cloud services like Vertex AI.

- Built-in Policy Enforcement: GKE natively supports Kubernetes Network Policies for network segmentation and provides additional security layers like Shielded GKE Nodes and Binary Authorization to lock down AI microservices and ensure only trusted container images are deployed.
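With Workload Identity Federation for GKE, IAM roles can be granted directly to a Kubernetes ServiceAccount through a principal identifier. The helper below assembles that identifier from hypothetical project, namespace, and ServiceAccount names. The format follows the GKE documentation as we understand it, so verify it against the current docs before relying on it.

```python
def wif_principal(project_number, project_id, namespace, ksa):
    """IAM principal for a Kubernetes ServiceAccount under Workload Identity Federation for GKE."""
    pool = f"{project_id}.svc.id.goog"  # the cluster's workload identity pool
    return (f"principal://iam.googleapis.com/projects/{project_number}"
            f"/locations/global/workloadIdentityPools/{pool}"
            f"/subject/ns/{namespace}/sa/{ksa}")


print(wif_principal("123456789012", "my-project", "agents", "planner"))
```

The resulting string is what you would pass as `--member` to `gcloud projects add-iam-policy-binding`, together with a narrowly scoped role such as `roles/aiplatform.user` for an agent that only needs to call Vertex AI.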

Integrated observability and AI-centric tooling

Understanding the "why" behind an agent's actions requires deep observability. GKE clusters are natively integrated with Google Cloud Observability (formerly the Google Cloud operations suite and, before that, Stackdriver), which includes Cloud Logging, Cloud Monitoring, and Cloud Trace.

- Automated Telemetry: By default, GKE sends system logs, audit logs, and application logs to Cloud Logging, providing a centralized and persistent datastore for analysis.

- AI-specific insights: The GKE Inference Gateway provides specific observability metrics for inference requests, such as request rate, latency, and errors. This, combined with Cloud Trace and OpenTelemetry, enables the distributed tracing of an agent's entire reasoning loop.

- AI-powered operations: Google is also embedding AI into the platform itself. Gemini in Google Cloud can assist with analyzing logs to identify anomalies or help platform engineers troubleshoot cluster issues, turning observability data into actionable insights.
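Cloud Logging parses single-line JSON that GKE containers write to stdout or stderr into structured log entries, treating fields such as `severity` and `message` specially. A minimal sketch of a Python `logging` formatter that produces such lines, with a hypothetical `agent` field for step and tool metadata, might look like this:

```python
import json
import logging
import sys


class JsonLineFormatter(logging.Formatter):
    """One JSON object per line, using the `severity` and `message` field names Cloud Logging recognizes."""

    def format(self, record):
        entry = {
            "severity": record.levelname,  # INFO, WARNING, ERROR, ...
            "message": record.getMessage(),
            "logger": record.name,
        }
        # Hypothetical structured context passed as extra={"agent": {...}}
        agent = getattr(record, "agent", None)
        if agent:
            entry["agent"] = agent
        return json.dumps(entry)


def make_logger(stream=None):
    logger = logging.getLogger("agent")
    handler = logging.StreamHandler(stream or sys.stdout)
    handler.setFormatter(JsonLineFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


log = make_logger()
log.info("tool call finished", extra={"agent": {"step": 3, "tool": "search", "latency_ms": 412}})
```

Logging each reasoning step and tool call as a structured entry makes it possible to filter and aggregate agent behavior in Cloud Logging instead of grepping free-form text.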

By combining the flexibility of open-source Kubernetes with a powerful suite of managed services, AI-optimized infrastructure, and a robust security posture, GKE provides a comprehensive platform to build, deploy, and manage enterprise-grade agentic AI applications with confidence and efficiency.

Try out agents on Google Kubernetes Engine

The Google Cloud team is offering a free trial account for our readers. Visit this link to claim a Free Google Cloud Account (Step 1) and follow the Lab in Step 2 to deploy an Agent built with ADK to GKE. The link is valid until Dec 31, 2025.

Conclusion: The future is Agent-Native

Running agentic AI applications in production is fundamentally a platform engineering challenge. While open-source Kubernetes provides the indispensable primitives, it’s the deliberate construction of a cohesive platform that unlocks their true potential. As we explored with Google Kubernetes Engine (GKE), managed platforms can significantly accelerate this journey by providing an integrated, secure, and AI-optimized foundation out of the box. By adopting a platform engineering mindset that prioritizes developer experience, robust governance, deep observability, and proactive cost management, organizations can build the resilient foundations required for this new class of autonomous software.

The evolution of this space is rapid, and the future points towards platforms that are not just running agents but are inherently "agent-aware" or agent-native. We are already seeing the emergence of specialized open-source frameworks like Kagent, which create higher-level, declarative abstractions for managing agentic infrastructure directly on Kubernetes. These tools aim to make Kubernetes a context-aware runtime that treats agents, tools, and LLMs as first-class citizens, simplifying the process of building, deploying, and governing them at scale. This trend suggests a convergence where the capabilities discussed in this article (state management, security, and orchestration) will become standardized features of an agent-native control plane.

The journey from cloud-native to agent-native has begun. By embracing the principles and architectural patterns outlined here, platform engineering teams can position their organizations at the forefront of this transformation. To get started, explore the wealth of resources available, including practical examples, tutorials and quickstarts for deploying AI on GKE. You can also grab a free copy of the Kubernetes for Developers ebook if you are looking to get started with Kubernetes today.