A decade ago, releasing software was an event. Teams booked late-night deployment windows, froze commits, and hoped release scripts would not fail halfway through. Continuous Integration and Continuous Deployment (CI/CD) changed that rhythm. As developer platforms matured, even small teams began shipping features to production several times a day.

Data analytics is reaching a similar moment. Continuous analytics is increasingly a necessity, yet turning operational data into insight still requires complex, multi-step workflows. Application databases generate data, pipelines move it elsewhere, transformations reshape it, and only then do dashboards update. Each step introduces latency and operational risk.

Streaming systems have improved freshness, but not simplicity. Many organizations rely on stacks built around tools like Apache Kafka, Apache Flink, or commercial Change Data Capture (CDC) solutions. These systems are powerful, but they demand deep expertise to build, operate, and scale reliably.

This pattern is familiar. Before CI/CD became mainstream, companies like Google had built automated build and deployment platforms, while most others remained stuck with custom adhoc solutions. What changed was not capability, but accessibility.

Analytics is moving from bespoke pipelines to default platform capability, similar to how CI/CD moved software delivery.

Why this matters now

The cost of delayed insight has changed. Many modern systems depend on understanding live behavior rather than yesterday’s data. Personalization, fraud detection, system reliability, and AI-driven features all require decisions to be made while events are still unfolding.

At the same time, the burden of maintaining complex analytics pipelines has shifted onto application teams and platform groups that are already stretched thin. What was once an acceptable tradeoff for insight is now a drag on velocity and reliability. Data freshness has become an operational concern, not just an analytical one.

This is why the problem has moved from optimization to expectation. Real-time insight is no longer a differentiator reserved for teams with large data engineering organizations. It is becoming a baseline capability that platforms are expected to provide.

Bridging OLTP and OLAP the hard way

For years, teams have bridged the gap between where data is created and where it is understood using multi-hop pipelines. Each hop adds latency, operational overhead, and failure modes.

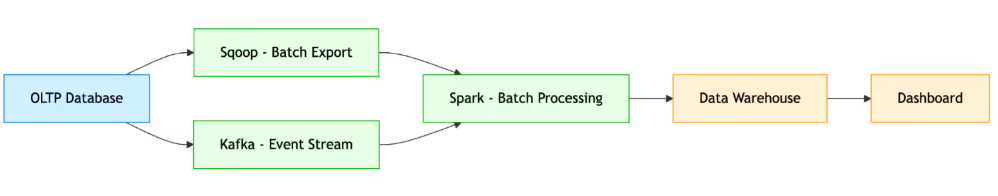

A typical flow looks like this: an application writes data to an OLTP database, change events are streamed through Apache Kafka, batch or streaming jobs transform the data, and dashboards refresh hours later. While this architecture enables analytics, it comes at the cost of complexity and fragility.

In practice, operational data often follows two parallel paths. Batch exports from OLTP systems feed analytical warehouses, while near real-time updates flow through CDC streams into stream processors such as Apache Spark. These paths eventually converge downstream, where data is reconciled, aggregated, and served to analysts. As scale grows, so does the operational burden. Latency compounds, pipelines drift out of sync, and data freshness becomes something teams must actively manage rather than assume.

This architecture made sense when compute was expensive and analytical workloads needed strict isolation from production systems. Today, however, the boundary between OLTP and Online Analytical Processing (OLAP) is increasingly under pressure.

The architectural shift: From pipelines to platforms

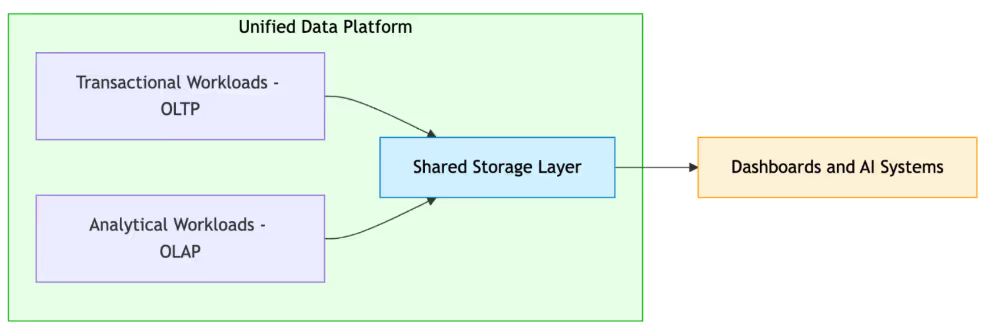

The most significant shift underway is the blurring of the boundary between OLTP and OLAP. Instead of continuously moving data out for analysis, analytical engines are moving closer to where data already lives, reducing hops, copies, and operational complexity.

This convergence is visible across the industry. Analytics platforms are integrating transactional capabilities directly into their offerings. Databricks has introduced Lakebase, providing Postgres-native services inside its lakehouse by leveraging technology from its recent acquisition of Neon, Snowflake has announced its own Postgres-based offering after hiring the team from Crunchy Data.

Earlier generations of Hybrid Transactional and Analytical Processing (HTAP) systems championed the unified model in the past. Google’s AlloyDB was designed from the ground up as a HTAP system, while pioneers like SingleStore and TiDB have long offered unified transactional and analytical capabilities.

This shift is possible because the underlying architectural lines are blurring. Modern systems blend transactional and analytical workloads over a shared storage layer that supports both types of computation. By bringing analytics closer to where data already resides, these designs either eliminate or greatly simplify the data movement that once slowed insight.

This new architecture enables data engineering to evolve. Teams can move from building brittle, one-off pipelines to providing stable, continuously updated platforms. When analytics and transactions share the same foundation, data models are no longer stale downstream copies. They can be versioned, tested, and updated continuously, similar to how CI/CD pipelines made software delivery more reliable.

By moving from brittle, multi-hop pipelines to a unified platform architecture, organizations realize several key advantages:

- Uniform security and governance: By consolidating transactional and analytical workloads, organizations can manage data access and compliance in a single place. Rather than synchronizing security policies across multiple "hops", permissions are applied once at the platform level, reducing the risk of unauthorized access or "governance leakage" during data movement.

- Operational simplicity and reliability: By eliminating the complex "multi-hop" pipelines involving tools like Kafka, Flink, or custom CDC solutions, the platform significantly reduces the number of potential failure modes. This shift allows teams to move away from maintaining brittle "glue code" and toward providing stable, continuously updated platforms.

- Trivial self-service analytics: Low-latency insights become a "default capability" of the platform. This empowers application teams to access live data without waiting for data engineering to build one-off pipelines.

The road ahead

A decade ago, continuous delivery changed how software was built. Today, continuous analytics is changing how decisions are made. Real-time visibility is no longer the privilege of a few companies with large internal platforms; it is becoming the default expectation.

The lesson from CI/CD still applies: progress happens not when technology becomes possible, but when it becomes easy. Continuous analytics is following the same path, turning what was once an advanced capability into an everyday discipline.

Unified platforms, HTAP systems, and lakehouse architectures are milestones along this journey, not its destination. They make it practical to move from delayed, disconnected insights to data that is live by design.

The direction is clear. As the gap between data creation and understanding closes, analytics begins to feel less like reporting and more like runtime, embedded, adaptive, and always on.