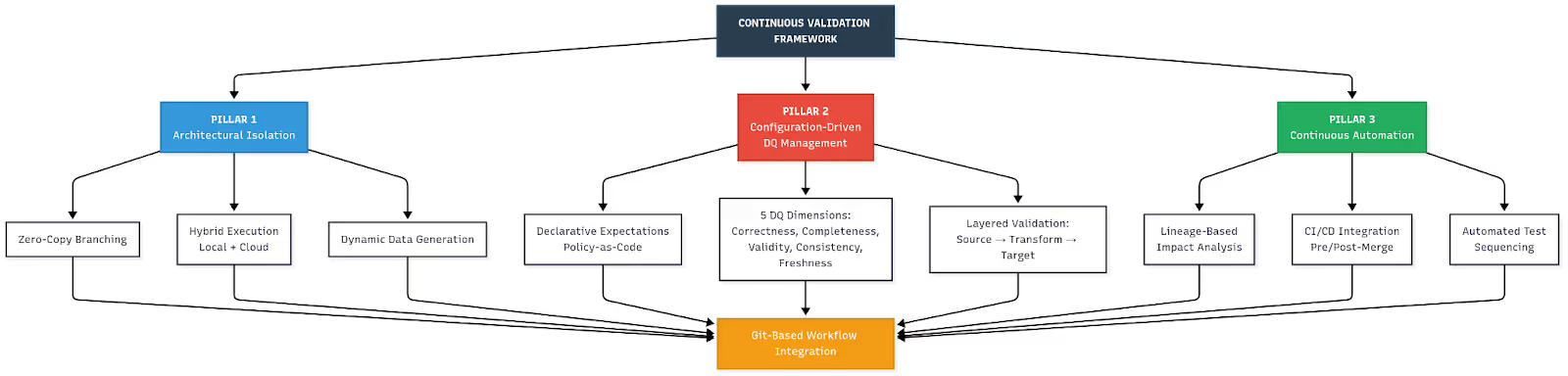

Modern data pipelines demand rigorous validation methodologies that extend beyond traditional software testing. This article introduces the Continuous Validation Framework (CVF), a comprehensive methodology for end-to-end data pipeline testing structured around three pillars: Architectural Isolation, Configuration-Driven Data Quality Management, and Continuous Automation through Lineage-Based Impact Analysis. We validate CVF through deployment in a production environment, demonstrating a 50% reduction in production incidents and 80% improvement in data quality issue detection, despite increased deployment time. The framework treats data quality as versioned code within Git-based workflows, enabling scalable and automated pipeline validation.

1. Introduction

The evolution from batch ETL to cloud-based ELT and streaming architectures has fundamentally increased data pipeline complexity. Traditional software testing, focused on code validation through unit and integration tests, proves insufficient for modern pipelines requiring comprehensive validation of data, schemas, and end-to-end processes. DataOps methodologies address this gap by synthesizing Agile principles with DevOps and platform engineering automation to improve data quality, velocity, and reliability throughout the data lifecycle.

The CVF addresses critical challenges in data pipeline testing: environment isolation at scale, declarative quality validation, and automated impact analysis of upstream changes. By integrating these components, the framework ensures data integrity is managed as code and validated continuously within CI/CD workflows.

2. Related work

Established data quality frameworks including TDQM, ISO 8000, and ISO 25012 provide generalized quality dimensions, while specialized frameworks address domain-specific requirements. However, these frameworks lack integration with modern CI/CD pipelines and automated lineage-based testing.

Data provenance research in e-Science has established foundations for tracking data origins and transformations, which CVF extends through automated impact analysis. Recent work on automated data quality verification demonstrates the feasibility of declarative testing approaches, though comprehensive frameworks integrating isolation, quality management, and automation remain limited in literature.

3. The continuous validation framework

3.1 Pillar 1: Architectural isolation

Effective pipeline testing requires reproducible, realistic environments that don't disrupt production systems. The CVF's primary innovation addresses the cost and time challenges of duplicating massive datasets for feature branches through zero-copy branching. Technologies such as LakeFS, Databricks Lakebase, and DeltaLake cloning support this approach, copying only metadata pointers rather than physical data objects, enabling instantaneous environment replication. This makes creating and destroying isolated development environments economically viable regardless of data scale.

The CVF employs a hybrid execution model: local Docker or Airflow instances provide rapid feedback for unit testing and schema validation, while cloud test environments enable comprehensive end-to-end validation under production-like conditions. Dynamic data generation on demand ensures coverage of boundary conditions and transformation rules without production data dependencies.

3.2 Pillar 2: Configuration-Driven data quality management

The CVF standardizes quality validation by decoupling checks from transformation code through declarative specifications. Data quality rules are defined externally via configuration files or rule tables as "Expectations", a Policy-as-Code architecture promoting consistency and maintainability.

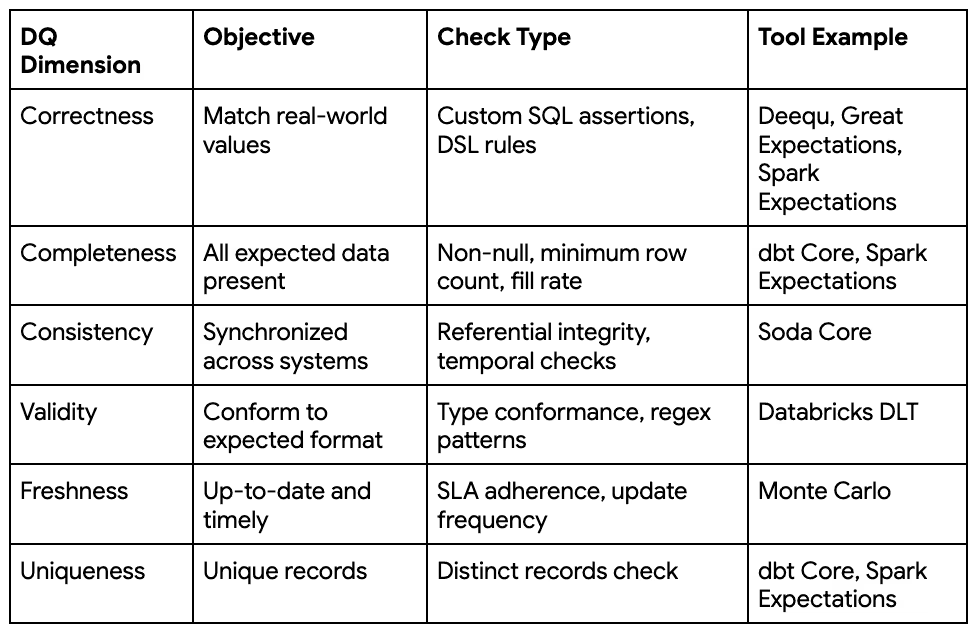

Quality expectations span five dimensions: Correctness (Accuracy), Completeness, Validity, Consistency, Freshness, and Uniqueness. Implementation follows a layered validation strategy:

- Source verification: Post-ingestion checks verify data completeness and format conformance

- Transformation rule validation: Semantic validation ensures business rules are correctly applied

- Target integrity testing: Final checks confirm no data loss, duplication, or corruption occurred

High-impact checks (uniqueness, non-null constraints) are prioritized to quickly address common issues and build organizational trust.

Table I: Configuration-Driven Data Quality Constraints

3.3 Pillar 3: Continuous automation and impact analysis

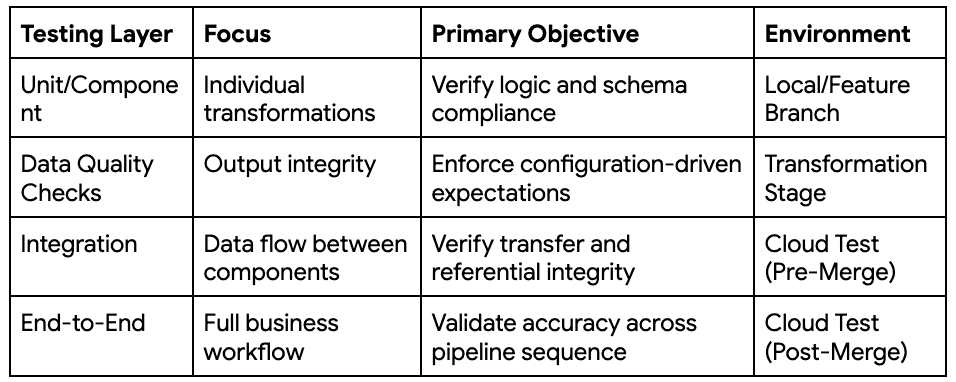

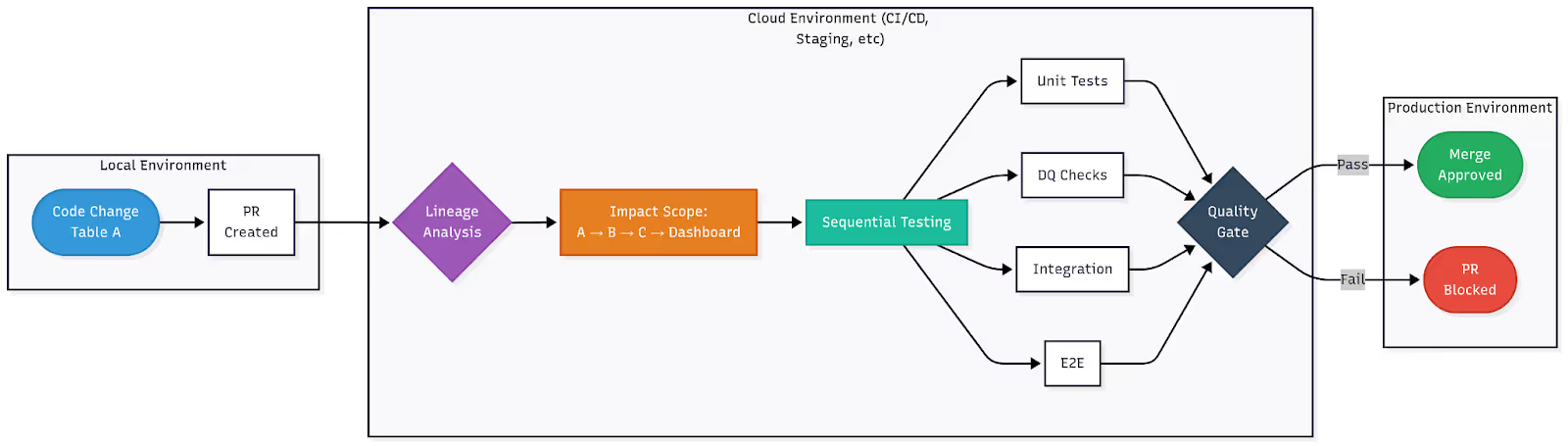

Integration with CI/CD pipelines ensures automated quality assurance before code merges. Git Pull Requests trigger automated pre-merge checks using unit tests and localized quality assertions on small datasets for rapid feedback. Comprehensive end-to-end validation executes post-merge in isolated cloud environments, maintaining mainline stability.

Automated lineage mapping constructs technical lineage graphs tracking data provenance. The CVF distinguishes between lineage (backward tracking to sources) and impact analysis (forward identification of affected downstream systems). Fine-grained, column-level lineage enables precise impact analysis for schema and data changes.

The lineage graph orchestrates targeted testing by identifying all dependent pipelines, enabling selective execution that reduces CI/CD latency while maintaining comprehensive coverage. End-to-end test execution runs dynamically generated test data through the precise sequence of affected pipelines, enforcing all declarative quality expectations.

Table II: Multi-layered testing matrix

4. Case study: Production deployment

We deployed CVF in a production data engineering environment supporting analytics and machine learning pipelines. Pre-CVF state: The primary mechanism for understanding code change impact was production deployment, resulting in significant engineering time devoted to incident resolution rather than feature development.

Implementation: CVF integration into PR and deployment workflows included:

- Feature branch isolation with zero-copy cloning

- Automated DQ checks in Git PR validation

- Lineage-based impact analysis for targeted testing

- Synthetic data generation for test coverage

Results:

- Production incidents reduced by 52% (from average 6.2 to 3.0 incidents per deployment cycle)

- Data quality issue detection improved by 78% before production deployment

- Engineering time allocation: Bug resolution decreased from 38% to 18% of engineering hours; feature development increased proportionally

- Trade-off: Deployment cycle time increased by 12% (from 47 to 53 minutes average)

- Confidence metric: Data consumer satisfaction scores improved from 6.8 to 8.4 (10-point scale)

Limitations observed: Synthetic data generation for unit testing could not replicate all production edge cases, particularly for complex business logic dependent on rare data patterns. Approximately 22% of pre-CVF production issues involved scenarios not covered by synthetic test data.

5. Discussion

The CVF demonstrates that treating data quality as code within automated CI/CD workflows significantly improves pipeline reliability. The 52% reduction in production incidents validates the framework's effectiveness despite modest deployment time increases. The 78% improvement in early quality issue detection shifts validation left in the development lifecycle, enabling faster overall iteration when accounting for reduced incident resolution time.

Key success factors: Zero-copy branching proved essential for economic feasibility at scale. Configuration-driven quality checks enabled rapid expansion of test coverage without code modifications. Automated lineage-based impact analysis ensured comprehensive downstream validation without exhaustive manual testing.

Limitations: The framework requires organizational investment in tooling infrastructure and cultural adoption of DataOps practices. Synthetic data limitations necessitate complementary production monitoring. Smaller teams may find the initial setup overhead prohibitive, though benefits scale with pipeline complexity.

6. Conclusion

The Continuous Validation Framework establishes a systematic, automated approach to end-to-end data pipeline testing. By integrating architectural isolation through zero-copy branching, declarative data quality management, and lineage-based continuous automation, CVF addresses the complexity of modern data ecosystems. Production deployment validates the framework's effectiveness, demonstrating substantial improvements in reliability and quality detection with acceptable trade-offs in deployment velocity. Future work should address synthetic data limitations through hybrid approaches combining generated and production-sampled datasets, and explore AI-driven test case generation for comprehensive coverage.

7. Statements and declarations

Ethical Approval

NA

Author contribution

Niruta Talwekar: Lead Writer, Researcher

Ashok Singamaneni: Co-Writer, Researcher and Reviewer

Funding

No Funding Received

Availability of data and materials

Data Proprietary of the company and used in articles as anonymized.

References

- Kleppmann, M. (2017). Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems. O'Reilly Media.

- Batini, C., & Scannapieco, M. (2016). Data and Information Quality: Dimensions, Principles and Techniques. Springer International Publishing.

- Schelter, S., Lange, D., Schmidt, P., Celikel, M., Biessmann, F., & Grafberger, A. (2018). Automating large-scale data quality verification. Proceedings of the VLDB Endowment, 11(12), 1781-1794.

- Buneman, P., Khanna, S., & Wang-Chiew, T. (2001). Why and where: A characterization of data provenance. International Conference on Database Theory, 316-330.

- Simmhan, Y. L., Plale, B., & Gannon, D. (2005). A survey of data provenance in e-science. ACM SIGMOD Record, 34(3), 31-36.

- Davidson, S. B., & Freire, J. (2008). Provenance and scientific workflows: challenges and opportunities. Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, 1345-1350.

- Halevy, A., Rajaraman, A., & Ordille, J. (2006). Data integration: The teenage years. Proceedings of the 32nd International Conference on Very Large Data Bases, 9-16.

- Humble, J., & Farley, D. (2010). Continuous Delivery: Reliable Software Releases through Build, Test, and Deployment Automation. Addison-Wesley Professional.

- Bass, L., Weber, I., & Zhu, L. (2015). DevOps: A Software Architect's Perspective. Addison-Wesley Professional.

- Reis, J., & Housley, M. (2022). Fundamentals of Data Engineering: Plan and Build Robust Data Systems. O'Reilly Media.

- Breck, E., Cai, S., Nielsen, E., Salib, M., & Sculley, D. (2017). The ML test score: A rubric for ML production readiness and technical debt reduction. IEEE Big Data, 1123-1132.

- Claude for English Enhancement